Difference between revisions of "Testbed Update Plan"

Revision as of 14:15, 12 August 2011

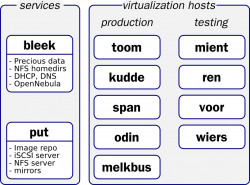

Planning the update of the middleware/development test bed

There are a number of tasks involved in bringing the testbed to where we would like it to be. We also need to agree on a timeframe in which we would like to see these things accomplished.

Inventory of current services

This section should list the current services we run and use on the testbeds. For each service, we should explain what we would like to do with it (keep, drop, change?).

| Service | System | keep/move/lose | Comments |

|---|---|---|---|

| LDAP | span | lose | to be discontinued after migration to central LDAP |

| DHCP | span | move | by dnsmasq, /etc/hosts and /etc/ethers. Should migrate elsewhere |

| DHCP | bleek | keep | bleek is going to be the main home directory and DHCP server, after it's been upgraded. |

| Cruisecontrol | bleek | move | build system for VL-e and BiG Grid; may move to Hudson. Currently transferred to cruisecontrol.testbed |

| Hudson | kudde | move | continuous integration, currently for jGridstart but could serve others |

| Home directories | everywhere | move | should be merged onto single NFS server |

| X509 host keys and pre-generated SSH keys | span | move | all in /var/local/hostkeys |

| Robot certificate (etoken) | kudde | keep/move | hardware token is plugged into kudde to generate vlemed robot certificates using cronjob + software in /root/etoken. Token can be moved to another machine but it should remain within the P4CTB |

| VMWare Server 1.0 | bleek | move | If bleek is destined to be home directory server, VMWare should be put on other hardware. |

VMWare on bleek.nikhef.nl

The following machines are all candidates for being scrapped, unless serious objections arise.

    drwxr-xr-x 2 dennisvd users 4096 Feb 5 2007 ./poc_test_centos3
    drwxr-xr-x 2 dennisvd users 4096 Mar 30 2007 ./CentOS 4
    drwxr-xr-x 2 dennisvd users 4096 Apr 10 2007 ./centos3-server
    drwxr-xr-x 2 dennisvd users 4096 Jun 5 2008 ./Debian Sid
    drwxrwxr-x 2 dennisvd users 4096 Oct 21 2008 ./poc1
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./Debian Etch 4.0r0
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./debian 4 minimal
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./scientific linux 4
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./CentOS4-Server-i386
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./CentOS3-i386
    drwxr-xr-x 2 dennisvd users 4096 Oct 21 2008 ./tinyCentOS3
    drwxr-xr-x 2 msalle users 4096 Jun 10 10:33 ./debian4-64
    drwxrwxr-x 2 dennisvd users 4096 Jun 10 10:33 ./vle-poc-r3
    drwxr-xr-x 2 janjust users 4096 Sep 28 16:20 ./debian5-64 (saved for Mischa)
    drwxr-xr-x 2 dennisvd users 4096 Oct 27 18:20 ./cruisecontrol_centos4_i386 (CC no longer uses this)
    drwxr-xr-x 2 dennisvd users 8192 Oct 28 15:07 ./cruisecontrol_centos4_x86_64 (CC no longer uses this)
    drwxrwxr-x 2 dennisvd users 4096 Nov 11 11:49 ./ren.nikhef.nl (used by WicoM, can be scrapped)

Besides these, several zip files exist which are packed versions of virtual machine images. These will also disappear unless someone wants to take them home for nostalgic reasons.

    -rw-r--r-- 1 dennisvd users 232361772 Aug 1 2007 debian-4-minimal.zip (scrap)
    -rw-r--r-- 1 janjust users 430972968 Aug 1 2007 gforge-4.5.15-fc3.zip (scrap)
    -rw-r--r-- 1 janjust users 654212858 Aug 1 2007 lcg27ui.zip (scrap)
    -rw-r--r-- 1 janjust users 203298842 Aug 1 2007 tinyCentOS-3.8.zip (scrap)
    -rw-r--r-- 1 janjust users 197921825 Aug 1 2007 tinyCentOS.zip (scrap)
    -rw-r--r-- 1 janjust users 1249781095 Aug 1 2007 vle-poc-r1-build003.zip (scrap)
    -rw-r--r-- 1 janjust users 1259094081 Aug 1 2007 vle-poc-r1-build004.zip (scrap)
    -rw-r--r-- 1 janjust users 1310361793 Aug 1 2007 vle-poc-r1-build005.zip (scrap)
    -rw-r--r-- 1 janjust users 1341440768 Aug 1 2007 vle-poc-r2-build001.zip (scrap)
    -rw-r--r-- 1 dennisvd users 647782099 Aug 30 2007 minimal-sl4-apt.zip (scrap)
    -rw-r--r-- 1 janjust users 803171882 Sep 18 2007 CentOS-3.9-glite-3.0.zip (scrap)
    -rw-r--r-- 1 janjust users 1386416706 Sep 18 2007 vle-poc-r2-build002.zip (scrap)
    -rw-r--r-- 1 janjust users 1394177060 Sep 21 2007 tutorial07.zip (scrap)
    -rw-r--r-- 1 janjust users 659597940 Oct 21 2008 centos4.7-i386.zip (scrap)
    -rw-r--r-- 1 janjust users 1536737886 Oct 22 2008 vle-poc-r3-build001.zip (scrap)
    -rw-r--r-- 1 janjust users 1541972232 Oct 22 2008 tutorial08.zip (save, but I already have backup)
    -rw-r--r-- 1 janjust users 941597293 Nov 11 2008 centos4.7-glite-3.1-wn-i386.zip (scrap)

Data plan for precious data

Precious means anything that took effort to put together, but nothing that lives in version control elsewhere. Think home directories, system configurations, pre-generated ssh host keys, X509 host certs, etc.

One idea is to put all of this on a box that is not involved in regular experimentation and messing about, and have backup arranged from this box to Sara with ADSM (which is the current service running on bleek). After this is arranged we begin to migrate precious data from all the other machines here, leaving the boxen in a state that we don't get sweaty palms over scratching and reinstalling them.

Hardware inventory

Perhaps this should be done first. Knowing what hardware we have is a prerequisite to making sensible choices about what we try to run where.

Changes here should probably also go to NDPF System Functions.

| name | ipmi name* | type | chipset | #cores | mem | OS | disk | service tag | remarks |

|---|---|---|---|---|---|---|---|---|---|

| bleek | bleek | PE1950 | Intel 5150 @2.66GHz | 4 | 8GB | CentOS4-64 | software raid1 2×500GB disks | CQ9NK2J | High Availability, dual power supply; precious data; backed up |

| toom | toom | PE1950 | Intel E5440 @2.83GHz | 8 | 16GB | CentOS5-64 | Hardware raid1 2×715GB disks | DC8QG3J | current Xen 3 hypervisor with mktestbed scripts |

| kudde | kudde | PE1950 | Intel E5440 @2.83GHz | 8 | 16GB | CentOS5-64 | Hardware raid1 2×715GB disks | CC8QG3J | Contains hardware token/robot proxy for vlemed; current Xen 3 hypervisor with mktestbed scripts |

| span | span | PE2950 | Intel E5440 @2.83GHz | 8 | 24GB | CentOS5-64 | Hardware raid10 on 4×470GB disks (950GB net) | FP1BL3J | DHCP,DNS,NFS,LDAP; home dirs must be moved to bleek; current Xen 3 hypervisor with mktestbed scripts |

| melkbus | hals | PEM600 | Intel E5450 @3.00GHz | 8 | 32GB | CentOS5-64 | 2x 320GB SAS disks | 76T974J | to be renamed to hals; Oscar's private machine? |

| odin | kop | PE1950 | Intel 5150 @2.66GHz | 4 | 8GB | CentOS5-64 | software raid1 2×500GB disks | 9Q9NK2J | High Availability, dual power supply; to be renamed to kop; Oscar's private machine? |

| put | put | PE2950 | | | | | | HMXP93J | former garitxako; to-be-NAS box for VM images |

| ren | blade-14 | PEM610 | | | | | | 4NZWF4J | former autana; status unknown |

| mient | blade-13 | PEM610 | | | | | | 5NZWF4J | former arauca; status unknown |

| voor | voor | PE1950 | | | | | | 982MY2J | former arrone; status unclear; Jan Just? |

| wiers | wiers | PE1950 | | | | | | B82MY2J | former aulnes; status unknown |

| ent | (no ipmi) | Mac Mini | Intel Core Duo @1.66GHz | 2 | 2GB | OS X 10.6 | SATA 80GB | | OS X box (no virtualisation) |

- *ipmi name is used for IPMI access; use <name>.ipmi.nikhef.nl.
- System details such as serial numbers can be retrieved from the command line with dmidecode -t 1.
- The service tags can be retrieved through IPMI, but unless you want to send raw commands with ipmitool, you first need freeipmi-tools. This contains ipmi-oem, which can be called thus:

      ipmi-oem -h host.ipmi.nikhef.nl -u username -p password dell get-system-info service-tag
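The naming rule and the ipmi-oem call above can be combined into a small loop over the machines in the table. This is only a sketch: the function names ipmi_host and service_tag_cmd are made up for illustration, and username and password are placeholders as in the text. It prints the commands rather than running them, so they can be reviewed first.

```shell
#!/bin/sh
# Map an "ipmi name" from the hardware table to its IPMI hostname.
ipmi_host() {
    printf '%s.ipmi.nikhef.nl\n' "$1"
}

# Print (not run) the ipmi-oem call that would fetch the service tag.
# "username" and "password" are placeholders, not real credentials.
service_tag_cmd() {
    printf 'ipmi-oem -h %s -u username -p password dell get-system-info service-tag\n' \
        "$(ipmi_host "$1")"
}

# ipmi names copied from the hardware table (ent has no IPMI).
for name in bleek toom kudde span hals kop put blade-14 blade-13 voor wiers; do
    service_tag_cmd "$name"
done
```

Note that the loop uses the ipmi name column, not the host name, so melkbus and odin appear as hals and kop.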

IPMI serial-over-LAN

- For details, see Serial Consoles.
- Serial-over-LAN can be started with ipmitool -I lanplus -H name.ipmi.nikhef.nl -U user sol activate.
- SOL access needs to be activated in the BIOS once, by setting console redirection through COM2.

For older systems that do not have a web interface for IPMI, the command-line version can be used. Install the OpenIPMI service so root can use ipmitool. Here is a sample of commands to add a user and give SOL access.

    ipmitool user enable 5
    ipmitool user set name 5 ctb
    ipmitool user set password 5 '<blah>'
    ipmitool channel setaccess 1 5 ipmi=on
    # make the user administrator (4) on channel 1.
    ipmitool user priv 5 4 1
    ipmitool channel setaccess 1 5 callin=on ipmi=on link=on
    ipmitool sol payload enable 1 5

Data Migration

Bleek.nikhef.nl is designated to become the home directory server, DHCP server and OpenNebula server. It will be the only persistent machine in the entire testbed; the rest should be considered volatile. It will also be the only machine where backups are done. Before all this can be arranged, it needs to be reinstalled with CentOS 5 (it currently runs CentOS 4). All important data and configurations are going to be migrated to span.nikhef.nl as an intermediate step, and after the upgrade this will be moved back and merged on bleek.

Disk space usage on bleek (in kB):

    50760 etc
    317336 lib
    432640 opt
    877020 export
    1035964 root
    2353720 var
    3258076 usr
    357844380 srv
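As a sanity check before moving everything to span, the figures above can be totalled. A minimal sketch using awk; the numbers are copied from the listing, and the function name total_kb is made up here.

```shell
#!/bin/sh
# Sum the first column (kB) of a "size name" listing read from stdin.
total_kb() {
    awk '{ sum += $1 } END { printf "%d\n", sum }'
}

# Disk usage on bleek in kB, copied from the listing above.
usage='50760 etc
317336 lib
432640 opt
877020 export
1035964 root
2353720 var
3258076 usr
357844380 srv'

kb=$(printf '%s\n' "$usage" | total_kb)
echo "total: $kb kB"
# Convert kB to GiB (1 GiB = 1048576 kB).
awk -v kb="$kb" 'BEGIN { printf "total: %.1f GiB\n", kb / 1048576 }'
```

The total comes to 366169896 kB, roughly 349 GiB, nearly all of it under srv. That is below the 950GB net capacity listed for span in the hardware table; how much of that is actually free is not recorded here.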

There is a script in place on span.nikhef.nl to do the backup from bleek, where /etc/rsyncd.conf is already set up.

rsync -a --password-file /etc/rsync-bleek-password --exclude '/sys**' --exclude '/proc**' --delete --delete-excluded bleek::export-perm /srv/backup/bleek/

It's not run automatically, so it should be run manually at the very latest right before reinstalling bleek.
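Since the backup is run by hand and uses --delete, it may be worth doing a dry run first. The wrapper below is a sketch, not the script that is actually in place on span: the function name bleek_backup is made up, and any extra arguments are simply passed through to rsync. The exclude patterns are quoted so that the local shell passes them to rsync unexpanded.

```shell
#!/bin/sh
# Run the bleek backup; extra rsync options may be given as arguments,
# e.g. --dry-run to see what would change without touching anything.
bleek_backup() {
    rsync -a "$@" \
        --password-file /etc/rsync-bleek-password \
        --exclude '/sys**' --exclude '/proc**' \
        --delete --delete-excluded \
        bleek::export-perm /srv/backup/bleek/
}

# Usage (on span):
#   bleek_backup --dry-run --itemize-changes   # preview only
#   bleek_backup                               # the real run
```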

Cruisecontrol migration

The former cruisecontrol instance on bleek has been stopped. The service has been transferred to cruisecontrol.testbed (toom.nikhef.nl), while the data in /srv/project/rpmbuild has been transferred to span.nikhef.nl and is exported from there with NFS.

Network plan

All of the machines should be put in the P4CTB VLAN (vlan 2), which is covered by ACLs to prevent public access. This is a first line of defence against intrusions. In some cases we may like to run virtual machines in the open/experimental network (vlan 8); for that the trick is to create a second bridge with a tagged ethernet device in vlan 8: see /etc/sysconfig/network-scripts/ifcfg-eth0.8

    VLAN=yes
    DEVICE=eth0.8
    BOOTPROTO=static
    ONBOOT=yes
    TYPE=Ethernet
    IPV6INIT=no
    IPV4INIT=no

Then bring the interface up and create the bridge:

    ifup eth0.8
    brctl addbr broe
    brctl addif broe eth0.8

Unfortunately, IPV6INIT=no doesn't help; the interface gets an IPv6 address anyway. This bridge can then be used to add virtual network devices for machines that live in open/experimental.
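The manual steps above could be wrapped so that they are safe to repeat, for instance after a reboot. This is a minimal sketch, not a script that exists on the testbed: the function names and the RUN switch are made up for illustration, and the final ip link set ... up is an addition that the text above does not mention (a bridge created with brctl addbr starts out down).

```shell
#!/bin/sh
# Set RUN=echo to print the commands instead of executing them.
RUN=${RUN:-}

# A Linux bridge exposes a "bridge" directory under sysfs.
bridge_exists() {
    [ -d "/sys/class/net/$1/bridge" ]
}

# Bring up the tagged interface and attach it to the bridge.
# Arguments: VLAN interface and bridge name (defaults as in the text).
setup_open_bridge() {
    vlan_if=${1:-eth0.8}
    bridge=${2:-broe}
    $RUN ifup "$vlan_if"
    if ! bridge_exists "$bridge"; then
        $RUN brctl addbr "$bridge"
        $RUN brctl addif "$bridge" "$vlan_if"
    fi
    # Not in the text above: activate the bridge device itself.
    $RUN ip link set "$bridge" up
}

# Usage:
#   RUN=echo; setup_open_bridge    # preview the commands
#   RUN=;     setup_open_bridge    # actually run them (as root)
```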

All systems have at least one gigabit interface, but put has two, which may be trunked. This could be useful for serving machine images. The blade systems have extra interfaces and may be capable of doing iSCSI offloading to the NIC.

TODO: draw a network lay-out.

IPv4 space is limited, and until the network upgrade (planned 2011Q1-3?) we're stuck with that. The current scheme of SNATting may help us out for a while.

LDAP migration

We're going to ditch our own directory service (it served us well, may it rest in peace) in favour of the central Nikhef service. This means changing user ids in some (all?) cases, which should preferably be done in a single swell foop.

We should request to add a testbed 'service' to LDAP with ourselves as managers, so we can automatically populate /root/.ssh/authorized_keys.

Here's a simple example of an ldapsearch call to find a certain user.

ldapsearch -x -H ldaps://hooimijt.nikhef.nl/ -b dc=farmnet,dc=nikhef,dc=nl uid=dennisvd
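To populate /root/.ssh/authorized_keys from such a query, the keys have to be pulled out of the LDIF that ldapsearch prints. The sketch below shows one way to do that. It rests on an assumption that is not confirmed anywhere on this page: that public keys are stored in an attribute called sshPublicKey and are not base64-encoded. The function name ldif_ssh_keys and the sample entry are made up.

```shell
#!/bin/sh
# Extract SSH public keys from LDIF on stdin.
# ASSUMPTION: keys live in an attribute named sshPublicKey and appear as
# plain "attr: value" lines. LDIF continuation lines (leading space) are
# joined to the previous line before matching.
ldif_ssh_keys() {
    awk '
        /^ /  { line = line substr($0, 2); next }   # continuation: join to previous
        { emit(line); line = $0 }
        END { emit(line) }
        function emit(l) { if (sub(/^sshPublicKey: /, "", l)) print l }
    '
}

# Demo with a fabricated entry; the key is wrapped over two lines
# the way ldapsearch would wrap a long value.
ldif_ssh_keys <<'EOF'
dn: uid=example,dc=farmnet,dc=nikhef,dc=nl
uid: example
sshPublicKey: ssh-rsa AAAAB3Nza
 C1yc2EXAMPLE example@testbed
EOF
```

On a real system the input would come from the ldapsearch call above (with -LLL to suppress comments), filtered on whatever group or service entry ends up representing the testbed managers.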

And here is the ldap.conf file to use for ldap authentication.

    base dc=farmnet,dc=nikhef,dc=nl
    timelimit 120
    bind_timelimit 120
    idle_timelimit 3600
    nss_initgroups_ignoreusers root,ldap,named,avahi,haldaemon,dbus,radvd,tomcat,radiusd,news,mailman
    uri ldaps://gierput.nikhef.nl/ ldaps://hooimijt.nikhef.nl/ ldaps://stalkaars-01.farm.nikhef.nl/ ldaps://stalkaars-03.farm.nikhef.nl/ ldaps://vlaai.nikhef.nl/
    ssl on
    tls_cacertdir /etc/openldap/cacerts

Migration to a cloud infrastructure

This will be based on OpenNebula. Previous testbed cloud experiences are reported here.