Difference between revisions of "Agile testbed"

| (75 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

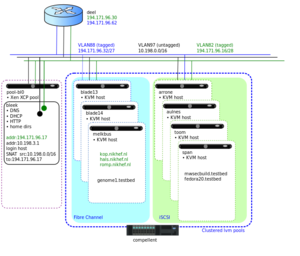

[[Image:P4ctb-3.svg|thumb|Diagram of the agile test bed]]

== Introduction to the Agile testbed ==

The ''Agile Testbed'' is a collection of virtual machine servers, tuned for quickly setting up virtual machines for testing, certification and experimentation in various configurations.

Although the testbed is hosted at the Nikhef data processing facility, it is managed completely separately from the production clusters. This page describes the system and network configurations of the hardware in the testbed, as well as the steps involved in setting up new machines.

The testbed is currently managed by Dennis van Dok and Mischa Sallé.

=== Getting access ===
[[Image:Testbed-access-v2.svg|thumb|Schema of access methods]]

Access to the testbed is by one of:
# ssh access from '''bleek.nikhef.nl''';
# IPMI Serial-over-LAN (only for the ''physical'' nodes);
# serial console access from libvirt (only for the ''virtual'' nodes).

The only machine that can be reached with ssh from outside the testbed is the management node '''bleek.nikhef.nl'''. Inbound ssh is restricted to the Nikhef network. The other testbed hardware lives on a LAN with no inbound connectivity. Since bleek also has an interface in this network, you can log on to the other machines from bleek.

Access to bleek.nikhef.nl is restricted to users who have a home directory with their ssh public key in ~/.ssh/authorized_keys and an entry in /etc/security/access.conf.

Since all access has to go through bleek, it is convenient to set up ssh in ~/.ssh/config to proxy connections to *.testbed through bleek, in combination with connection sharing:

 Host *.testbed
   CheckHostIP no
   ProxyCommand ssh -q -A bleek.nikhef.nl /usr/bin/nc %h %p 2>/dev/null
 
 Host *.nikhef.nl
   ControlMaster auto
   ControlPath /tmp/%h-%p-%r.shared

The user identities on bleek are managed in the [[NDPFDirectoryImplementation|Nikhef central LDAP directory]], as is customary on many of the testbed VMs. The home directories are located on bleek and NFS-exported to those VMs that wish to use them (but not to the virtual machine hosts, which don't need them).

== Operational procedures ==

The testbed is not too tightly managed, but here's an attempt to keep our sanity.

=== Logging of changes ===

All changes need to be communicated by e-mail to [mailto:CTB-changelog@nikhef.nl CTB-changelog@nikhef.nl].
(This replaces the earlier [[CTB Changelog]].)

If changes affect virtual machines, check if [[Agile testbed/VMs]] and/or [[NDPF System Functions]] need updating.

=== creating a new virtual machine ===

In summary, a ''new'' virtual machine needs:
# a name
# an IP address
# a MAC address

and, optionally,
* a pre-generated ssh host key (highly recommended!)
* a recipe for automated customization
* a [[#Obtaining an SSL host certificate|host key for SSL]]

==== Preparing the environment ====

The name/IP address/MAC address triplet of machines '''in the ''testbed'' domain''' should be registered in /etc/hosts and /etc/ethers on '''bleek.nikhef.nl'''. The choice of these is free, but take some care:

* '''Check''' that the name doesn't already exist
* '''Check''' that the IP address doesn't already exist
* '''Check''' that the MAC address is unique

For machines with '''public IP addresses''', the names and IP addresses are already linked in DNS upstream. Only the MAC address needs to be registered.
'''Check''' that the MAC address is unique.
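The checks above can be scripted. The following is a minimal sketch, not an existing testbed tool: the function name <tt>check_new_vm</tt> is hypothetical, and it assumes the usual file layouts (<tt>IP name [aliases]</tt> in /etc/hosts, <tt>MAC name</tt> in /etc/ethers). The file names are parameters, so it can be tried on copies first.

```shell
#!/bin/sh
# Hypothetical helper (not an existing testbed script): check that a proposed
# name, IP address and MAC address are not yet in use.
# Usage: check_new_vm NAME IP MAC [HOSTS_FILE] [ETHERS_FILE]
# Prints one line per conflict; returns 0 only if all three are unused.
check_new_vm() {
    name=$1
    ip=$2
    mac=$3
    hosts=${4:-/etc/hosts}
    ethers=${5:-/etc/ethers}
    status=0

    # The name may be the canonical name or an alias (fields 2..NF); skip comments.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts"; then
        echo "name $name already in $hosts"
        status=1
    fi

    # The IP address is the first field of a hosts entry.
    if awk -v a="$ip" '$1 == a { found = 1 } END { exit !found }' "$hosts"; then
        echo "IP address $ip already in $hosts"
        status=1
    fi

    # The MAC address is the first field of an ethers entry; compare case-insensitively.
    if awk -v m="$mac" 'tolower($1) == tolower(m) { found = 1 } END { exit !found }' "$ethers"; then
        echo "MAC address $mac already in $ethers"
        status=1
    fi

    return $status
}
```

For example, <tt>check_new_vm newvm.testbed 10.198.4.11 52:54:00:12:34:56</tt> on bleek, before editing the files.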

After editing,
* '''restart''' dnsmasq:
 /etc/init.d/dnsmasq restart

Now almost everything is ready to start building a VM. If ssh is to be used later on to log in to the machine (and this is almost '''always''' the case), it is tremendously '''useful''' to have a pre-generated host key. Otherwise the host key changes each time the machine is re-installed, and ssh refuses to log in until you remove the offending key from known_hosts (this '''will happen'''). Therefore, run
 /usr/local/bin/keygen <hostname>
to pre-generate the ssh keys.

==== Installing the machine ====

* '''Choose a VM host''' to start the installation on. Peruse the [[#Hardware|hardware inventory]] and pick one of the available machines.
* '''Choose a [[#Storage|storage option]]''' for the machine's disk image.
* '''Choose OS, memory and disk space''' as needed.
* '''Figure out''' which network bridge to use, as [[#Network|determined by the network]] of the machine.

These choices map directly onto the parameters given to the virt-install script; the virt-manager GUI comes with a wizard for entering these parameters in a slower, but more friendly manner. Here is a command-line example:

 virsh vol-create-as vmachines ''name''.img 10G
 virt-install --noautoconsole --name ''name'' --ram 1024 --disk vol=vmachines/''name''.img \
   --arch=i386 --network bridge=br0,mac=52:54:00:xx:xx:xx --os-type=linux \
   --os-variant=rhel5.4 --location http://spiegel.nikhef.nl/mirror/centos/5/os/i386 \
   --extra-args 'ks=http://bleek.testbed/mktestbed/kickstart/centos5-32-kvm.ks'
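The <tt>xx:xx:xx</tt> part of the MAC address has to be filled in with something unique. 52:54:00 is the prefix conventionally used for QEMU/KVM virtual NICs (libvirt also uses it when it generates an address itself). Below is a minimal sketch for drawing the remaining three bytes at random. It assumes <tt>od</tt> and /dev/urandom are available, and the function name is hypothetical. The result should still be checked against /etc/ethers as described under [[#Preparing the environment|Preparing the environment]].

```shell
# Hypothetical helper: print a random MAC address with the 52:54:00 prefix
# used for QEMU/KVM guests.
random_kvm_mac() {
    # od prints the three random bytes as lower-case hex, e.g. " 3f 0a c2".
    od -An -N3 -tx1 /dev/urandom |
        awk '{ printf "52:54:00:%s:%s:%s\n", $1, $2, $3 }'
}
```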

A Debian installation is similar, but uses preseeding instead of kickstart. The preseed configuration is on bleek.

 virt-install --noautoconsole --name debian-builder.testbed --ram 1024 --disk pool=vmachines,size=10 \
   --network bridge=br0,mac=52:54:00:fc:18:dd --os-type linux --os-variant debiansqueeze \
   --location http://ftp.nl.debian.org/debian/dists/squeeze/main/installer-amd64/ \
   --extra-args 'auto=true priority=critical url=http://bleek.testbed/d-i/squeeze/./preseed.cfg'

The latter example just names the storage pool, so that virt-install creates the image on the fly, whereas the first example pre-creates the volume. Both should work.
With ''--noautoconsole'', virt-install does not connect to the guest console and the installation runs unattended; otherwise a VNC viewer is launched.

* '''Record''' the new machine in [[Agile testbed/VMs]].
* '''Write''' a changelog entry to [mailto:ctb-changelog@nikhef.nl the ctb-changelog] mailing list. Here is a '''[[Agile testbed/Templates#New machine|template]]'''.

A few notes:
* The network autoconfiguration seems to happen too soon: the host bridge doesn't pass DHCP packets for a while after the domain is created, which systematically causes the Debian installer to complain. Fortunately the configuration can be retried from the menu; the second time around it works.
** With Debian preseeding, this may be automated by setting either <tt>d-i netcfg/dhcp_options select Retry network autoconfiguration</tt> or <tt>d-i netcfg/dhcp_timeout string 60</tt>.
* Alternative installation kickstart files are available, e.g. http://bleek.nikhef.nl/mktestbed/kickstart/centos6-64-kvm.ks for CentOS 6.
* Sometimes a storage device is re-used (especially when recreating a domain after removing it '''and''' the associated storage). The partitioner may then see an existing LVM definition and fail, complaining that the partition already exists. An existing LVM volume can be re-used explicitly with the argument <tt>--disk vol=vmachines/blah</tt>.

=== reinstalling a virtual machine ===

In summary:
# turn off the running machine
# make libvirt forget it ever existed
# remove the disk image
# start from [[#Installing the machine|installing the machine]] in the procedure [[#creating a new virtual machine|creating a new virtual machine]].

On the command line:
 virsh destroy argus-el5-32.testbed
 virsh undefine argus-el5-32.testbed
 sleep 1 # give libvirt some time, or the next call may fail
 virsh vol-delete /dev/vmachines/argus-el5-32.testbed.img
followed by virt-install as before.
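The four commands can be generated for any VM name. Here is a minimal sketch, assuming the image is a volume named <tt>''name''.img</tt> in the <tt>vmachines</tt> pool as in the installation examples (virsh vol-delete also accepts a volume name together with <tt>--pool</tt>). The function name is hypothetical. It only prints the commands, so they can be reviewed before being piped to <tt>sh</tt> on the VM host.

```shell
# Hypothetical helper: print (not run) the commands that remove a VM
# before reinstalling it.  Usage: reinstall_cmds VM [POOL]
reinstall_cmds() {
    vm=$1
    pool=${2:-vmachines}
    printf 'virsh destroy %s\n' "$vm"        # turn off the running machine
    printf 'virsh undefine %s\n' "$vm"       # make libvirt forget the domain
    printf 'sleep 1\n'                       # give libvirt some time
    printf 'virsh vol-delete --pool %s %s.img\n' "$pool" "$vm"   # remove the disk image
}
```

For example, <tt>reinstall_cmds argus-el5-32.testbed | sh -x</tt>, followed by virt-install as before.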

=== importing a VM disk image from another source ===

If the machine did not exist in this testbed before, follow the steps to [[#Preparing the environment|prepare the environment]].

Depending on the type of disk image, it may need to be converted before it can be used. Xen disk images can be used straight away as raw images, but the bus type may need to be set to 'ide'.

* Place the disk image on a storage pool, e.g. put.testbed.
* Refresh the pool with <tt>virsh pool-refresh put.testbed</tt>.

Run virt-install (or the GUI wizard):

 virt-install --import --disk vol=put.testbed/ref-debian6-64.testbed.img,bus=ide \
   --name ref-debian6-64.testbed --network bridge=br0,mac=00:16:3E:C6:02:0F \
   --os-type linux --os-variant debiansqueeze --ram 1000

=== Migrating a VM to another host ===

A VM whose disk image is on shared storage may be migrated to another host, e.g. for load balancing, or because the original host needs a reboot. Migration is easily done from the virt-manager interface with connections to both source and target open. Take care that '''after the migration''' the definition of the machine on the source host '''is deleted''', to prevent future confusion and collisions. Do ''not'' choose to delete the associated storage, of course.
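The same can be done from the command line with <tt>virsh migrate</tt>. Below is a minimal sketch, assuming the target host accepts libvirt connections over ssh (<tt>qemu+ssh://''host''/system</tt>). With <tt>--persistent</tt> the domain is defined on the target, and with <tt>--undefinesource</tt> its definition is removed from the source. Together these take care of the clean-up step above without touching the shared storage. The function name is hypothetical and it only prints the command.

```shell
# Hypothetical helper: print the virsh command for a live migration of VM to
# TARGET_HOST, keeping it defined on the target and undefining it on the source.
# Usage: migrate_cmd VM TARGET_HOST
migrate_cmd() {
    vm=$1
    target=$2
    printf 'virsh migrate --live --persistent --undefinesource %s qemu+ssh://%s/system\n' \
        "$vm" "$target"
}
```

Run on the source host as <tt>migrate_cmd ''name'' ''target-host'' | sh</tt>.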

A changelog entry is '''not''' generally required for a simple migration.

=== decommissioning a VM ===

=== User management ===

==== adding a new user to the testbed ====

Users are known from their LDAP entries. To allow another user on the testbed, add their name to
 /etc/security/access.conf
on bleek (at least if they need to log on to bleek), create a home directory for them on bleek, and copy the user's ssh public key to ~/.ssh/authorized_keys in that home directory.
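Entries in /etc/security/access.conf follow the pam_access format <tt>permission : users : origins</tt>, and the first matching line wins. A new <tt>+</tt> entry therefore has to come before any final catch-all <tt>- : ALL : ALL</tt> line. Below is a minimal sketch, assuming bleek's file may end with such a deny rule (the sketch also works if it doesn't). The function name is hypothetical.

```shell
# Hypothetical helper: allow USER to log in (from anywhere) via pam_access.
# Usage: allow_user USER [ACCESS_CONF]
allow_user() {
    user=$1
    conf=${2:-/etc/security/access.conf}
    entry="+ : $user : ALL"

    # Nothing to do if the exact entry is already there.
    grep -qxF "$entry" "$conf" && return 0

    # Insert the entry just before the first "- : ALL : ALL" rule,
    # or append it if there is no such rule.
    awk -v e="$entry" '
        !done && $0 ~ /^-[ \t]*:[ \t]*ALL[ \t]*:[ \t]*ALL[ \t]*$/ { print e; done = 1 }
        { print }
        END { if (!done) print e }
    ' "$conf" > "$conf.new" || return 1

    # Copy back instead of mv, so the file keeps its owner and permissions.
    cat "$conf.new" > "$conf" && rm -f "$conf.new"
}
```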

Something along these lines (but this is untested):
 test -d /user/$NEWUSER || cp -r /etc/skel /user/$NEWUSER
 chown -R $NEWUSER:`id -ng $NEWUSER` /user/$NEWUSER
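The ssh key can be installed in the same spirit. Here is a minimal sketch, assuming the user's public key is in a single-line file and that home directories live under /user as in the lines above. The function name is hypothetical. It does not change ownership, so the <tt>chown -R</tt> above still has to run (as root) afterwards. With sshd's default StrictModes setting, keys in files or directories with unsuitable ownership or permissions are rejected.

```shell
# Hypothetical helper: add a public key to USER's authorized_keys.
# Usage: install_ssh_key USER KEYFILE [HOME_BASE]
install_ssh_key() {
    user=$1
    key=$(cat "$2") || return 1     # single-line public key
    base=${3:-/user}
    sshdir=$base/$user/.ssh

    mkdir -p "$sshdir" && chmod 700 "$sshdir" || return 1
    touch "$sshdir/authorized_keys"
    # Append the key only if this exact line is not present yet.
    grep -qxF "$key" "$sshdir/authorized_keys" ||
        printf '%s\n' "$key" >> "$sshdir/authorized_keys"
    chmod 600 "$sshdir/authorized_keys"
}
```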

==== removing a user from the testbed ====

==== granting management rights ====

==== adding a non-Nikhef user to a single VM ====

=== Obtaining an SSL host certificate ===

Host or server SSL certificates for volatile machines in the testbed are kept on span.nikhef.nl:/var/local/hostkeys. The FQDN of the host determines which CA should be used:
* for *.nikhef.nl, the TERENA eScience SSL CA should be used,
* for *.testbed, the testbed CA should be used.
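This rule is simple enough to express as a shell <tt>case</tt> statement, e.g. in a script that prepares certificates. Here is a minimal sketch; the function name and its output strings are illustrative only.

```shell
# Hypothetical helper: name the CA that should sign the host certificate for FQDN.
ca_for_host() {
    case $1 in
        *.nikhef.nl) echo "TERENA eScience SSL CA" ;;
        *.testbed)   echo "testbed CA" ;;
        *)           echo "no CA rule for $1" >&2; return 1 ;;
    esac
}
```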

==== Generating certificate requests for the TERENA eScience SSL CA ====

* Go to bleek.nikhef.nl:/var/local/hostkeys/pem/
* Generate a new request by running ../[https://ndpfsvn.nikhef.nl/cgi-bin/viewvc.cgi/pdpsoft/trunk/agiletestbed/make-terena-req.sh?view=co make-terena-req.sh] ''hostname''. This will create a directory for the hostname with the key and request in it.
* Send the resulting newrequest.csr file to the local registrar (Paul or Elly).
* When the certificate file comes back, install it in /var/local/hostkeys/pem/''hostname''/.

==== Requesting certificates from the testbed CA ====

There is a cheap 'n easy (and entirely untrustworthy) CA installation on bleek:/srv/ca-testbed/ca/.
The DN is
 /C=NL/O=VL-e P4/CN=VL-e P4 testbed CA 2
and this supersedes the testbed CA that had its key on an eToken (which was more secure but inconvenient).

Generating a new host cert is as easy as

 cd /srv/ca-testbed/ca
 ./gen-host-cert.sh test.testbed

You must enter the password for the CA key. The resulting certificate and key will be copied to
 /var/local/hostkeys/pem/(hostname)/

The testbed CA files (both the earlier CA and the new one) are distributed as rpm and deb packages from http://bleek.nikhef.nl/extras.

==== Automatic configuration of machines ====

The default kickstart scripts for testbed VMs will download a 'firstboot' script at the end of the install cycle, based on the name they've been given by DHCP. Look in span.nikhef.nl:/usr/local/mktestbed/firstboot for the files that are used, but be aware that these are managed with git (gitosis on span).

=== Configuration of LDAP authentication ===

==== Fedora Core 14 ====

The machine fc14.testbed is configured for LDAP authn against ldap.nikhef.nl. Some notes:
* /etc/nslcd.conf:
 uri ldaps://ldap.nikhef.nl ldaps://hooimijt.nikhef.nl
 base dc=farmnet,dc=nikhef,dc=nl
 ssl on
 tls_cacertdir /etc/openldap/cacerts

* /etc/openldap/cacerts is symlinked to /etc/grid-security/certificates.

==== Debian 'Squeeze' ====

Debian is a bit different; the nslcd daemon is linked against GnuTLS instead of OpenSSL. Due to a bug (so it would seem) one cannot simply point to a directory of certificates. Debian provides a script, update-ca-certificates, to collect all the certificates in one big file. It includes every *.crt file below /usr/local/share/ca-certificates, whereas certificates under /usr/share/ca-certificates are only included when listed in /etc/ca-certificates.conf. Here is the short procedure:

 mkdir /usr/local/share/ca-certificates/igtf
 for i in /etc/grid-security/certificates/*.0 ; do ln -s $i /usr/local/share/ca-certificates/igtf/`basename $i`.crt; done
 update-ca-certificates

The file /etc/nsswitch.conf needs to include these lines to use LDAP:
 passwd: compat ldap
 group: compat ldap
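A quick check of a machine's /etc/nsswitch.conf for these lines is sketched below; the function name is hypothetical. Once nslcd is running, <tt>getent passwd ''username''</tt> should return the user's LDAP entry.

```shell
# Hypothetical helper: succeed if both the passwd and group databases in
# nsswitch.conf list ldap as a source.
nss_uses_ldap() {
    conf=${1:-/etc/nsswitch.conf}
    for db in passwd group; do
        grep -Eq "^${db}:(.*[[:space:]])?ldap([[:space:]]|\$)" "$conf" || return 1
    done
}
```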

The following can be used as /etc/nslcd.conf:
 uid nslcd
 gid nslcd
 base dc=farmnet,dc=nikhef,dc=nl
 ldap_version 3
 ssl on
 uri ldaps://ldap.nikhef.nl ldaps://hooimijt.nikhef.nl
 tls_cacertfile /etc/ssl/certs/ca-certificates.crt
 timelimit 120
 bind_timelimit 120
 nss_initgroups_ignoreusers root

The libpam-ldap package needs to be installed as well, with the following in /etc/pam_ldap.conf:
 base dc=farmnet,dc=nikhef,dc=nl
 uri ldaps://ldap.nikhef.nl/ ldaps://hooimijt.nikhef.nl/
 ldap_version 3
 pam_password md5
 ssl on
 tls_cacertfile /etc/ssl/certs/ca-certificates.crt

At least this seems to work.

== Network ==

The testbed machines are connected to three VLANs:

{| class="wikitable"
! vlan
! description
! network
! gateway
! bridge name
! ACL
|-
| 82
| [[NDPF_System_Functions#P4CTB|P4CTB]]
| 194.171.96.16/28
| 194.171.96.30
| br82
| No inbound traffic on privileged ports
|-
| 88
| [[NDPF_System_Functions#Nordic (Open_Experimental)|Open/Experimental]]
| 194.171.96.32/27
| 194.171.96.62
| br88
| Open
|-
| 97 (untagged)
| local
| 10.198.0.0/16
| 10.198.3.1
| br0
| testbed only
|- style="color: #999;"
| 84
| [[NDPF System Functions#MNGT/IPMI|IPMI and management]]
| 172.20.0.0/16
| 172.20.255.254
|
| separate management network for IPMI and Serial-Over-Lan
|}

The untagged VLAN is for internal use by the physical machines. The VMs are connected to bridge devices according to their purpose, and groups of VMs are isolated by using nested VLANs (Q-in-Q).
This is an example configuration of /etc/network/interfaces:

 # The primary network interface
 auto eth0
 iface eth0 inet manual
     up ip link set $IFACE mtu 9000
 
 auto br0
 iface br0 inet dhcp
     bridge_ports eth0
 
 auto eth0.82
 iface eth0.82 inet manual
     up ip link set $IFACE mtu 9000
     vlan_raw_device eth0
 
 auto br2
 iface br2 inet manual
     bridge_ports eth0.82
 
 auto eth0.88
 iface eth0.88 inet manual
     vlan_raw_device eth0
 
 auto br8
 iface br8 inet manual
     bridge_ports eth0.88
 
 auto vlan100
 iface vlan100 inet manual
     up ip link set $IFACE mtu 1500
     vlan_raw_device eth0.82
 
 auto br2_100
 iface br2_100 inet manual
     bridge_ports vlan100

In this example, VLAN 82 is configured on the physical interface eth0 (which is also the port of the untagged bridge br0), and the resulting interface eth0.82 is used in a second bridge, br2. The nested VLAN 82.100 (vlan100) is configured on top of eth0.82, and finally a bridge br2_100 is made. VMs that are added to this bridge will therefore only receive traffic from VLAN 100 nested inside VLAN 82.
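Adding another isolated group means repeating the last two stanzas with a different inner VLAN. Below is a minimal sketch that prints them. It assumes the naming pattern of the example (<tt>vlan''N''</tt> for the nested VLAN, with a bridge name of your choice) and the same MTU of 1500. The function name is hypothetical, and the output still has to be appended to /etc/network/interfaces by hand.

```shell
# Hypothetical helper: print ifupdown stanzas for a VLAN nested inside an
# outer VLAN interface, plus a bridge for the VMs.
# Usage: nested_vlan_stanza OUTER_IFACE INNER_VLAN BRIDGE
nested_vlan_stanza() {
    outer=$1    # e.g. eth0.82
    inner=$2    # e.g. 100
    bridge=$3   # e.g. br2_100
    # \$IFACE is escaped so that ifupdown, not this shell, expands it.
    cat <<EOF
auto vlan$inner
iface vlan$inner inet manual
    up ip link set \$IFACE mtu 1500
    vlan_raw_device $outer

auto $bridge
iface $bridge inet manual
    bridge_ports vlan$inner
EOF
}
```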

=== NAT ===

The gateway host for the 10.198.0.0/16 range is bleek.testbed (10.198.3.1). It takes care of network address translation (NAT) to outside networks.

 # iptables -t nat -L -n
 ...
 Chain POSTROUTING (policy ACCEPT)
 target     prot opt source               destination
 ACCEPT     all  --  10.198.0.0/16        10.198.0.0/16
 SNAT       all  --  10.198.0.0/16        0.0.0.0/0           to:194.171.96.17
 ...
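Rules that would produce this listing can be added with iptables as sketched below. This is an assumption about how the rules were created, since this page does not say how bleek sets them up or makes them persistent. IP forwarding (<tt>net.ipv4.ip_forward=1</tt>) must also be enabled for the NAT to work. The function name is hypothetical, and it only prints the commands.

```shell
# Hypothetical helper: print iptables commands for the NAT setup shown above.
# Usage: nat_rules [INTERNAL_NET] [PUBLIC_IP]
nat_rules() {
    net=${1:-10.198.0.0/16}
    public=${2:-194.171.96.17}
    # Traffic between testbed hosts is left untranslated ...
    echo "iptables -t nat -A POSTROUTING -s $net -d $net -j ACCEPT"
    # ... everything else from the testbed leaves with bleek's public address.
    echo "iptables -t nat -A POSTROUTING -s $net -j SNAT --to-source $public"
}
```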

=== Multicast ===

The systems for clustered LVM and Ganglia rely on multicast to work. Some out-of-the-box Debian installations end up with a host entry like

 127.0.1.1 arrone.testbed

These should be removed!
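Such an entry makes the host's own name resolve to a loopback address, which is the likely reason it gets in the way of services that look up their own address. Below is a minimal sketch to remove it, assuming GNU sed (for <tt>-i</tt> with a backup suffix). The function name is hypothetical. The original file is kept as ''file''.bak.

```shell
# Hypothetical helper: delete 127.0.1.1 entries from a hosts file.
# Usage: drop_loopback_hostname [HOSTS_FILE]
drop_loopback_hostname() {
    hosts=${1:-/etc/hosts}
    # Remove lines whose first field is 127.0.1.1; keep a copy as HOSTS_FILE.bak.
    sed -i.bak '/^[[:space:]]*127\.0\.1\.1[[:space:]]/d' "$hosts"
}
```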

== Storage ==

The hypervisors of the testbed all connect to the same shared storage backend (a Fujitsu DX200 system called KLAAS) over iSCSI.
The storage backend exports a number of pools to the testbed. These are formatted as LVM volume groups and shared through a clustered LVM setup.

In libvirt, the VG is known as a 'pool' under the name <code>vmachines</code> (location <code>/dev/p4ctb</code>).

=== Clustered LVM setup ===

The clustering of nodes is provided by corosync. Here are the contents of the configuration file /etc/corosync/corosync.conf:
 totem {
     version: 2
     cluster_name: p4ctb
     token: 3000
     token_retransmits_before_loss_const: 10
     clear_node_high_bit: yes
     crypto_cipher: aes256
     crypto_hash: sha256
     interface {
         ringnumber: 0
         bindnetaddr: 10.198.0.0
         mcastport: 5405
         ttl: 1
     }
 }
 
 logging {
     fileline: off
     to_stderr: no
     to_logfile: no
     to_syslog: yes
     syslog_facility: daemon
     debug: off
     timestamp: on
     logger_subsys {
         subsys: QUORUM
         debug: off
     }
 }
 
 quorum {
     provider: corosync_votequorum
     expected_votes: 2
 }

The crypto settings refer to a file /etc/corosync/authkey, which must be present on all systems. There is no predefined list of cluster members: any node that has the key can join, which is why the shared key is a good idea. You don't want any unexpected members joining the cluster. The quorum of 2 is, of course, because there are only 3 machines at the moment.
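With corosync's votequorum and one vote per node, the cluster is quorate when more than half of the expected votes are present, i.e. ''n''/2 + 1 (integer division) out of ''n''. A one-line sketch of that arithmetic (the function name is hypothetical):

```shell
# Votes needed for quorum out of N expected votes (one vote per node):
# more than half, using integer division.
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}
```

For the three current nodes, <tt>quorum_for 3</tt> gives 2: one node can be down without losing quorum, but a single node on its own is not quorate.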

As long as the cluster is quorate, everything should be fine. That means that at any time one of the machines can be maintained, rebooted, etc. without affecting the availability of the storage on the other nodes.

As long as at least one node has the cluster up and running, others should be able to join even if the cluster is not quorate. For example, if only a single node out of three is up, the cluster is not quorate and storage queries are blocked; but when another node joins, the cluster is quorate again and should unblock.

==== installation ====

Based on Debian 9.

Install the required packages:

 apt-get install corosync clvm

Set up clustered locking in LVM (locking_type 3):

 sed -i 's/^\([[:space:]]*locking_type[[:space:]]*=[[:space:]]*\)1[[:space:]]*$/\13/' /etc/lvm/lvm.conf
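To verify the result, the configured value can be read back. Below is a minimal sketch that ignores commented-out lines; the function name is hypothetical. On a running system, <tt>lvmconfig global/locking_type</tt> should report the same value.

```shell
# Hypothetical helper: print the locking_type set in an lvm.conf file,
# skipping commented-out lines.
lvm_locking_type() {
    conf=${1:-/etc/lvm/lvm.conf}
    sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$conf"
}
```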

Make sure all nodes have the same corosync.conf file and the same authkey. A key can be generated with corosync-keygen.

==== Running ====

Start corosync:

 systemctl start corosync

Test the cluster status with

 corosync-quorumtool -s
 dlm_tool -n ls

Both should show all nodes.
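Before going on, it can be useful to wait until the cluster is quorate. Below is a minimal sketch, assuming the status output of <tt>corosync-quorumtool -s</tt> contains a line of the form <tt>Quorate: Yes</tt>. Both function names are hypothetical.

```shell
# Succeed if the corosync-quorumtool -s output on stdin reports quorum.
is_quorate() {
    grep -Eq '^Quorate:[[:space:]]+Yes'
}

# Poll corosync-quorumtool until the cluster is quorate or TIMEOUT seconds pass.
wait_for_quorum() {
    timeout=${1:-60}
    while [ "$timeout" -gt 0 ]; do
        corosync-quorumtool -s 2> /dev/null | is_quorate && return 0
        sleep 5
        timeout=$((timeout - 5))
    done
    return 1
}
```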

Start the iSCSI and multipath daemons:

 systemctl start iscsid
 systemctl start multipathd

See if the iSCSI paths are visible:

 multipath -ll
 3600000e00d2900000029295000110000 dm-1 FUJITSU,ETERNUS_DXL
 size=2.0T features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw
 |-+- policy='service-time 0' prio=50 status=active
 | |- 6:0:0:1 sdi 8:128 active ready running
 | `- 3:0:0:1 sdg 8:96 active ready running
 `-+- policy='service-time 0' prio=10 status=enabled
   |- 4:0:0:1 sdh 8:112 active ready running
   `- 5:0:0:1 sdf 8:80 active ready running
 3600000e00d2900000029295000100000 dm-0 FUJITSU,ETERNUS_DXL
 size=2.0T features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw
 |-+- policy='service-time 0' prio=50 status=active
 | |- 4:0:0:0 sdb 8:16 active ready running
 | `- 5:0:0:0 sdc 8:32 active ready running
 `-+- policy='service-time 0' prio=10 status=enabled
   |- 3:0:0:0 sdd 8:48 active ready running
   `- 6:0:0:0 sde 8:64 active ready running
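With four paths per LUN, as above, a quick summary of healthy paths per device can be made from the same output. Here is a minimal sketch, assuming the devices are listed by WWID as in the example (with user_friendly_names enabled the header lines look different). The function name is hypothetical.

```shell
# Hypothetical helper: read `multipath -ll` output on stdin and print, per
# multipath device, the number of paths in state "active ready running".
count_active_paths() {
    awk '
        # Device header, e.g. "3600000e00d2900000029295000110000 dm-1 FUJITSU,ETERNUS_DXL"
        /^[0-9a-f]+ dm-[0-9]+/ { dev = $1; order[++n] = dev; count[dev] = 0; next }
        dev != "" && /active ready running/ { count[dev]++ }
        END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
    '
}
```

Used as <tt>multipath -ll | count_active_paths</tt>, each device in the example above would be reported with 4 paths.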

Only then start the clustered LVM:

 systemctl start lvm2-cluster-activation.service

==== Troubleshooting ====

Cluster log messages are found in /var/log/syslog.

== Services ==

There are a number of services being maintained in the testbed, most of them running on '''bleek.nikhef.nl'''.

{| class="wikitable"
! service
! host(s)
! description
|-
| [[#DHCP|DHCP]]
| bleek
| DHCP is part of dnsmasq
|-
| [[#backup|backup]]
| bleek.nikhef.nl
| Backup to Sara with Tivoli Storage Manager
|-
| [[#Squid cache|Squid]]
| span.testbed
| An 800 GB squid cache, to ease stress on external install servers
|-
| [[#Koji|koji]]
| koji-hub.testbed
| Package build system for Fedora/Red Hat RPMs
|-
| [[#Gitosis|gitosis]]
| bleek.nikhef.nl
| shared git repositories
|-
| DNS
| bleek.testbed
| DNS is part of dnsmasq
|-
| home directories
| bleek
| NFS exported to hosts in vlan2 and vlan17
|-
| X509 host keys and pre-generated ssh keys
| bleek
| NFS exported directory /var/local/hostkeys
|-
| kickstart
| bleek
|
|-
| preseed
| bleek
|
|-
| firstboot
| bleek
|
|-
| ganglia
| bleek
| Multicast 239.1.1.9; see the Ganglia [http://ploeg.nikhef.nl/ganglia/?c=Experimental web interface] on ploeg.
|-
| Nagios
| bleek
| https://bleek.nikhef.nl:8444/nagios/ (requires authorisation)
|}

=== Backup ===

The home directories and other precious data on bleek are backed up to SurfSARA with the Tivoli Storage Manager system. To interact with the system, run the dsmj tool.
A home-brew init.d script, /etc/init.d/adsm, starts the service. The key is kept in /etc/adsm/TSM.PWD. The backup logs to /var/log/dsmsched.log, and this log is rotated automatically as configured in /opt/tivoli/tsm/client/ba/bin/dsm.sys.

Bleek is also backed up daily to beerput with rsync.

Since bleek has been virtualised, the risk of data loss through a disk failure has been greatly reduced, so maintaining multiple backup strategies is probably less urgent than it used to be.

=== Squid cache ===

The VM host '''span.testbed''' doubles as a squid cache, mainly to relieve stress on the Fedora servers for the nightly builds by koji.

 diff /etc/squid/squid.conf{.default,}
 632,633c632,633
 < #acl our_networks src 192.168.1.0/24 192.168.2.0/24
 < #http_access allow our_networks
 ---
 > acl our_networks src 10.198.0.0/16 194.171.96.16/28
 > http_access allow our_networks
 1786a1787
 > cache_dir diskd /srv/squid 800000 16 256

=== Koji ===

The virtual machines koji-hub.testbed, koji-builder.testbed and koji-boulder.testbed run automated builds of grid security middleware.

== Hardware ==

Changes here should probably also go to [[NDPF System Functions]].

…
! disk
! [http://www.dell.com/support/ service tag]
! Fibre Channel
! location
! remarks
|-style="background-color: #cfc;"
| blade13
| bl0-13
| …
| E5504 @ 2.00GHz
| 2×4
| align="right"|16GB
| Debian 6, KVM
| 70 GB + 1 TB Fibre Channel (shared)
| 5NZWF4J
| yes
| C08 blade13
|
|-style="background-color: #cfc;"
| blade14
| bl0-14
| …
| E5504 @ 2.00GHz
| 2×4
| align="right"|16GB
| Debian 6, KVM
| 70 GB
| 4NZWF4J
| yes
| C08 blade14
|
|-style="background-color: #cfc;"
| melkbus
| bl0-02
| PEM600
| Intel E5450 @3.00GHz
| 2×4
| align="right"|32GB
| VMWare ESXi
| 2× 320GB SAS disks + 1 TB Fibre Channel (shared)
| 76T974J
| yes
| C08, blade 2
|
|-style="background-color: #ffc;"
| arrone
| …
| …
| Intel E5320 @ 1.86GHz
| 2×4
| align="right"|8GB
| Debian 6, KVM
| 70 GB + 400 GB iSCSI (shared)
| 982MY2J
| no
| C10
|
|-style="background-color: #ffc;"
| aulnes
| …
| …
| Intel E5320 @ 1.86GHz
| 2×4
| align="right"|8GB
| Debian 6, KVM
| 70 GB + 400 GB iSCSI (shared)
| B82MY2J
| no
| C10
|
|-style="background-color: #ffc;"
| toom
| toom
| …
| Intel E5440 @ 2.83GHz
| 2×4
| align="right"|16GB
| Debian 6, KVM
| Hardware raid1 2×715GB disks
| DC8QG3J
| no
| C10
|
|-style="background-color: #ffc;"
| span
| span
| PE2950
| Intel E5440 @ 2.83GHz
| 2×4
| align="right"|24GB
| Debian 6, KVM
| Hardware raid10 on 4×470GB disks (950GB net)
| FP1BL3J
| no
| C10
| plus [[#Squid|squid proxy]]
|-style="color: #444;"
| kudde
| …
| …
| Intel E5440 @ 2.83GHz
| 2×4
| align="right"|16GB
| CentOS 5, Xen
| Hardware raid1 2×715GB disks
| …
| C10
| Contains hardware encryption tokens for robot certificates; managed by Jan Just
|-style="color: #444;"
| storage
| put
| PE2950
| Intel E5150 @ 2.66GHz
| 2×2
| align="right"|8GB
| FreeNAS 8.3
| 6× 500 GB SATA, raidz (ZFS)
| HMXP93J
| C03
| former garitxako
|- style="color: #444;"
| ent
| —
| …
| Intel Core Duo @1.66GHz
| 2
| align="right"|2GB
| OS X 10.6
| SATA 80GB
| —
| no
| C24
| OS X box (no virtualisation)
|-style="color: #444;"
| ren
| bleek
| PE1950
| Intel 5150 @ 2.66GHz
| 2×2
| align="right"|8GB
| CentOS 5
| software raid1 2×500GB disks
| 7Q9NK2J
| no
| C10
| High Availability, dual power supply; former bleek
|}

| Line 169: | Line 724: | ||

ipmi-oem -h host.ipmi.nikhef.nl -u username -p password dell get-system-info service-tag | ipmi-oem -h host.ipmi.nikhef.nl -u username -p password dell get-system-info service-tag | ||

| − | Most machines | + | Most machines run [http://www.debian.org/releases/stable/ Debian wheezy] with [http://www.linux-kvm.org/page/Main_Page KVM] for virtualization, managed by [http://libvirt.org/ libvirt]. |

See [[NDPF_Node_Functions#P4CTB|the official list]] of machines for the most current view. | See [[NDPF_Node_Functions#P4CTB|the official list]] of machines for the most current view. | ||

| + | |||

| + | === Console access to the hardware === | ||

| + | |||

| + | In some cases direct ssh access to the hardware may not work anymore (for instance when the gateway host is down). All machines have been configured to have a serial console that can be accessed through IPMI. | ||

| + | |||

| + | * For details, see [[Serial Consoles]]. The setup for Debian squeeze is [[#Serial over LAN for hardware running Debian|slightly different]]. | ||

| + | * can be done by <code>ipmitool -I lanplus -H name.ipmi.nikhef.nl -U user sol activate</code>. | ||

| + | * SOL access needs to be activated in the BIOS ''once'', by setting console redirection through COM2. | ||

| + | |||

| + | For older systems that do not have a web interface for IPMI, the command-line version can be used. Install the OpenIPMI service so root can use ipmitool. Here is a sample of commands to add a user and give SOL access. | ||

| + | |||

| + | ipmitool user enable 5 | ||

| + | ipmitool user set name 5 ctb | ||

| + | ipmitool user set password 5 '<blah>' | ||

| + | ipmitool channel setaccess 1 5 ipmi=on | ||

| + | # make the user administrator (4) on channel 1. | ||

| + | ipmitool user priv 5 4 1 | ||

| + | ipmitool channel setaccess 1 5 callin=on ipmi=on link=on | ||

| + | ipmitool sol payload enable 1 5 | ||

| + | |||

| + | ==== Serial over LAN for hardware running Debian ==== | ||

| + | |||

| + | On Debian squeeze you need to tell grub2 what to do with the kernel command line in the file /etc/default/grub. Add or uncomment the following settings: | ||

| + | GRUB_CMDLINE_LINUX_DEFAULT="" | ||

| + | GRUB_CMDLINE_LINUX="console=tty0 console=ttyS1,115200n8" | ||

| + | GRUB_TERMINAL=console | ||

| + | GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=1 --word=8 --parity=no --stop=1" | ||

| + | |||

| + | Then run '''update-grub'''. | ||

=== Installing Debian and libvirt on new hardware === | === Installing Debian and libvirt on new hardware === | ||

| Line 240: | Line 824: | ||

This just means qemu could not create the domain! | This just means qemu could not create the domain! | ||



Latest revision as of 14:30, 13 February 2023

Introduction to the Agile testbed

The Agile Testbed is a collection of virtual machine servers, tuned for quickly setting up virtual machines for testing, certification and experimentation in various configurations.

Although the testbed is hosted at the Nikhef data processing facility, it is managed completely separately from the production clusters. This page describes the system and network configurations of the hardware in the testbed, as well as the steps involved to set up new machines.

The testbed is currently managed by Dennis van Dok and Mischa Sallé.

Getting access

Access to the testbed is by one of:

- ssh access from bleek.nikhef.nl;

- IPMI Serial-over-LAN (only for the physical nodes);

- serial console access from libvirt (only for the virtual nodes).

The only machine that can be reached with ssh from outside the testbed is the management node bleek.nikhef.nl. Inbound ssh is restricted to the Nikhef network. The other testbed hardware lives on a LAN with no inbound connectivity. Since bleek also has an interface in this network, you can log on to the other machines from bleek.

Access to bleek.nikhef.nl is restricted to users who have a home directory with their ssh public key in ~/.ssh/authorized_keys and an entry in /etc/security/access.conf.

Since all access has to go through bleek, it is convenient to set up ssh to proxy connections to *.testbed through bleek in combination with sharing connections, in ~/.ssh/config:

    Host *.testbed
        CheckHostIP no
        ProxyCommand ssh -q -A bleek.nikhef.nl /usr/bin/nc %h %p 2>/dev/null
    Host *.nikhef.nl
        ControlMaster auto
        ControlPath /tmp/%h-%p-%r.shared

The user identities on bleek are managed in the Nikhef central LDAP directory, as is customary on many of the testbed VMs. The home directories are located on bleek and NFS exported to those VMs that wish to use them (but not to the virtual hosts, which don't need them).

Operational procedures

The testbed is not too tightly managed, but here's an attempt to keep our sanity.

Logging of changes

All changes need to be communicated by e-mail to CTB-changelog@nikhef.nl. (This replaces the earlier CTB Changelog.)

If changes affect virtual machines, check if Agile testbed/VMs and/or NDPF System Functions need updating.

creating a new virtual machine

In summary, a new virtual machine needs:

- a name

- an ip address

- a mac address

and, optionally,

- a pre-generated ssh host key (highly recommended!)

- a recipe for automated customization

- a host key for SSL
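The MAC address can be any address that is not yet in use. A minimal sketch for picking one at random, assuming the 52:54:00 prefix that libvirt uses for KVM guests (and that the examples on this page use) is acceptable; random_kvm_mac is a hypothetical helper, not an existing testbed script, and its result still has to be checked for uniqueness as described under Preparing the environment.

```bash
# random_kvm_mac (hypothetical helper): print a random MAC address in the
# 52:54:00:xx:xx:xx range that libvirt assigns to KVM guests by default.
random_kvm_mac() {
    local hex
    # Read three random bytes and render them as six lowercase hex digits.
    hex=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n')
    printf '52:54:00:%s:%s:%s\n' "${hex:0:2}" "${hex:2:2}" "${hex:4:2}"
}
```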

Preparing the environment

The name/ip address/mac address triplet of machines in the testbed domain should be registered in /etc/hosts and /etc/ethers on bleek.nikhef.nl. The choice of these is free, but take some care:

- Check that the name doesn't already exist

- Check that the ip address doesn't already exist

- Check that the mac address is unique

For machines with public IP addresses, the names and IP addresses are already linked in DNS upstream. Only the mac address needs to be registered. Check that the mac address is unique.
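The checks above can be scripted. This is a sketch rather than an existing testbed tool: check_testbed_triplet is a hypothetical name, it assumes the standard /etc/hosts layout (address first, then names) and /etc/ethers layout (MAC address first), and it compares MAC addresses as text, so an entry written without leading zeros would not be recognised.

```bash
# check_testbed_triplet NAME IP MAC [HOSTS] [ETHERS]  (hypothetical helper)
# Report every collision with existing entries; return 1 if there is any.
check_testbed_triplet() {
    local name=$1 ip=$2 mac=$3
    local hosts=${4:-/etc/hosts} ethers=${5:-/etc/ethers}
    local rc=0

    # In /etc/hosts the first field is the address; comments are stripped first.
    if awk -v ip="$ip" '{ sub(/#.*/, "") } $1 == ip { found = 1 } END { exit !found }' "$hosts"; then
        echo "IP address $ip already in $hosts"; rc=1
    fi
    # The remaining fields of /etc/hosts are the canonical name and aliases.
    if awk -v n="$name" '{ sub(/#.*/, "") } { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts"; then
        echo "name $name already in $hosts"; rc=1
    fi
    # In /etc/ethers the first field is the MAC address; ignore case.
    if awk -v m="$mac" '{ sub(/#.*/, "") } NF && tolower($1) == tolower(m) { found = 1 } END { exit !found }' "$ethers"; then
        echo "MAC address $mac already in $ethers"; rc=1
    fi
    return "$rc"
}
```

For example, check_testbed_triplet newvm.testbed 10.198.4.20 52:54:00:12:ab:cd prints nothing and succeeds if all three values are still free (the values here are placeholders).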

After editing, restart dnsmasq:

/etc/init.d/dnsmasq restart

Now almost everything is ready to start building a VM. If ssh is to be used later on to log in to the machine (and this is almost always the case), it is tremendously useful to have a pre-generated host key. Otherwise the host key changes each time the machine is re-installed, and ssh refuses to log in until you remove the offending key from known_hosts (this will happen). Therefore, run

/usr/local/bin/keygen <hostname>

to pre-generate the ssh keys.

Installing the machine

- Choose a VM host to start the installation on. Peruse the hardware inventory and pick one of the available machines.

- Choose a storage option for the machine's disk image.

- Choose OS, memory and disk space as needed.

- Determine which network bridge to link to, based on the network the machine belongs to.

These choices tie in directly to the parameters given to the virt-install script; the virt-manager GUI comes with a wizard for entering these parameters in a slower, but more friendly manner. Here is a command-line example:

    virsh vol-create-as vmachines name.img 10G
    virt-install --noautoconsole --name name --ram 1024 --disk vol=vmachines/name.img \
        --arch=i386 --network bridge=br0,mac=52:54:00:xx:xx:xx --os-type=linux \
        --os-variant=rhel5.4 --location http://spiegel.nikhef.nl/mirror/centos/5/os/i386 \
        --extra 'ks=http://bleek.testbed/mktestbed/kickstart/centos5-32-kvm.ks'

A Debian installation is similar, but uses preseeding instead of kickstart. The preseed configuration is on bleek.

    virt-install --noautoconsole --name debian-builder.testbed --ram 1024 --disk pool=vmachines,size=10 \
        --network bridge=br0,mac=52:54:00:fc:18:dd --os-type linux --os-variant debiansqueeze \
        --location http://ftp.nl.debian.org/debian/dists/squeeze/main/installer-amd64/ \
        --extra 'auto=true priority=critical url=http://bleek.testbed/d-i/squeeze/./preseed.cfg'

The latter example just specifies the storage pool, so that an image is created on the fly, whereas the first example pre-generates it; both should work. With --noautoconsole the installation runs unattended; otherwise a VNC viewer is launched.

- Record the new machine in Agile testbed/VMs.

- Write a changelog entry to the ctb-changelog mailing list. Here is a template

A few notes:

- The network autoconfiguration seems to happen too soon: for a while after the domain is created, the host bridge does not pass the DHCP packets, which systematically causes the Debian installer to complain. Fortunately the configuration can be retried from the menu; the second time around it works.

- With Debian preseeding, this may be automated by setting either d-i netcfg/dhcp_options select Retry network autoconfiguration or d-i netcfg/dhcp_timeout string 60.

- Alternative installation kickstart files are available; e.g. http://bleek.nikhef.nl/mktestbed/kickstart/centos6-64-kvm.ks for CentOS 6.

- Sometimes, a storage device is re-used (especially when recreating a domain after removing it and the associated storage). The re-use may cause the partitioner to see an existing LVM definition and fail, complaining that the partition already exists; you can re-use an existing LVM volume by using the argument: --disk vol=vmachines/blah.

reinstalling a virtual machine

In summary:

- turn off the running machine

- make libvirt forget it ever existed

- remove the disk image

- continue with the step Installing the machine in the procedure for creating a new virtual machine.

On the command line:

    virsh destroy argus-el5-32.testbed
    virsh undefine argus-el5-32.testbed
    sleep 1   # give libvirt some time, or the next call may fail
    virsh vol-delete /dev/vmachines/argus-el5-32.testbed.img

Followed by virt-install as before.
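The same steps wrapped in a shell function. This is a sketch under two assumptions: the disk image is a volume named <name>.img in the vmachines pool (as with argus-el5-32.testbed.img above), and VIRSH is a hypothetical override hook, so that VIRSH=echo prints the commands instead of running them.

```bash
# reinstall_vm NAME [POOL]  (hypothetical helper)
# Stop the domain, remove its libvirt definition and delete its disk image,
# so that it can be installed afresh with virt-install.
reinstall_vm() {
    local name=$1 pool=${2:-vmachines}
    local virsh=${VIRSH:-virsh}

    # destroy fails if the domain is not running; that is fine here.
    "$virsh" destroy "$name" || true
    "$virsh" undefine "$name" || return
    sleep 1   # give libvirt some time, or the next call may fail
    "$virsh" vol-delete --pool "$pool" "$name.img"
}
```

For example, reinstall_vm argus-el5-32.testbed, followed by virt-install as before.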

importing a VM disk image from another source

If the machine did not exist in this testbed before, follow the steps in Preparing the environment.

Depending on the type of disk image, it may need to be converted before it can be used. Xen disk images can be used straightaway as raw images, but the bus type may need to be set to 'ide'.

- Place the disk image on a storage pool, e.g. put.testbed.

- refresh the pool with virsh pool-refresh put.testbed

Run virt-install (or the GUI wizard).

    virt-install --import --disk vol=put.testbed/ref-debian6-64.testbed.img,bus=ide \
        --name ref-debian6-64.testbed --network bridge=br0,mac=00:16:3E:C6:02:0F \
        --os-type linux --os-variant debiansqueeze --ram 1000

Migrating a VM to another host

A VM whose disk image is on shared storage may be migrated to another host, e.g. for load balancing, or because the original host needs a reboot. Migration is easily done from the virt-manager interface with connections to both the source and the target host open. After the migration, take care to delete the machine's definition on the source host to prevent future confusion and collisions. Do not choose to delete the associated storage, of course.

A changelog entry is not generally required for a simple migration.
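Migration can also be started from the command line with virsh migrate. A sketch, assuming the target host accepts qemu+ssh connections as root and sees the same shared storage: --persistent defines the domain on the target, and --undefinesource removes the definition from the source host, which takes care of the clean-up mentioned above without touching the storage. migrate_vm and the VIRSH override are hypothetical.

```bash
# migrate_vm NAME TARGET_HOST  (hypothetical helper)
# Live-migrate a running domain whose disk image is on shared storage.
migrate_vm() {
    local name=$1 target=$2
    # --persistent: define the domain on the target host as well;
    # --undefinesource: remove the definition from the source host.
    "${VIRSH:-virsh}" migrate --live --persistent --undefinesource \
        "$name" "qemu+ssh://root@$target/system"
}
```

For example, migrate_vm genome3.testbed toom.testbed (the names are only an illustration).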

decommissioning a VM

User management

adding a new user to the testbed

Users are known from their ldap entries. All it takes to allow another user on the testbed is adding their name to

/etc/security/access.conf

on bleek (at least if logging on to bleek is necessary), adding a home directory on bleek, and copying the user's ssh key to the appropriate file (~/.ssh/authorized_keys).

Something along these lines (but this is untested):

    test -d "/user/$NEWUSER" || cp -r /etc/skel "/user/$NEWUSER"
    chown -R "$NEWUSER:$(id -ng "$NEWUSER")" "/user/$NEWUSER"

removing a user from the testbed

granting management rights

adding a non-Nikhef user to a single VM

Obtaining an SSL host certificate

Host or server SSL certificates for volatile machines in the testbed are kept on span.nikhef.nl:/var/local/hostkeys. The FQDN of the host determines which CA should be used:

- for *.nikhef.nl, the TERENA eScience SSL CA should be used,

- for *.testbed, the testbed CA should be used.

Generating certificate requests for the TERENA eScience SSL CA

- Go to bleek.nikhef.nl:/var/local/hostkeys/pem/

- Generate a new request by running ../make-terena-req.sh hostname. This will create a directory for the hostname with the key and request in it.

- Send the resulting newrequest.csr file to the local registrar (Paul or Elly).

- When the certificate file comes back, install it in /var/local/hostkeys/pem/hostname/.

Requesting certificates from the testbed CA

There is a cheap 'n easy (and entirely untrustworthy) CA installation on bleek:/srv/ca-testbed/ca/. The DN is

/C=NL/O=VL-e P4/CN=VL-e P4 testbed CA 2

and this supersedes the testbed CA that had a key on an eToken (which was more secure but inconvenient).

Generating a new host cert is as easy as

    cd /srv/ca-testbed/ca
    ./gen-host-cert.sh test.testbed

You must enter the password for the CA key. The resulting certificate and key will be copied to

/var/local/hostkeys/pem/(hostname)/

The testbed CA files (both the earlier CA as well as the new one) are distributed as rpm and deb package from http://bleek.nikhef.nl/extras.

Automatic configuration of machines

The default kickstart scripts for testbed VMs will download a 'firstboot' script at the end of the install cycle, based on the name they've been given by DHCP. Look in span.nikhef.nl:/usr/local/mktestbed/firstboot for the files that are used, but be aware that these are managed with git (gitosis on span).

Configuration of LDAP authentication

Fedora Core 14

The machine fc14.testbed is configured for LDAP authn against ldap.nikhef.nl. Some notes:

- /etc/nslcd.conf:

    uri ldaps://ldap.nikhef.nl ldaps://hooimijt.nikhef.nl
    base dc=farmnet,dc=nikhef,dc=nl
    ssl on
    tls_cacertdir /etc/openldap/cacerts

- /etc/openldap/cacerts is symlinked to /etc/grid-security/certificates.

Debian 'Squeeze'

Debian is a bit different: the nslcd daemon is linked against GnuTLS instead of OpenSSL. Due to what seems to be a bug, one cannot simply point to a directory of certificates. Debian provides a script to collect all the certificates in one big file. Here is the short procedure:

    mkdir /usr/share/ca-certificates/igtf
    for i in /etc/grid-security/certificates/*.0 ; do
        ln -s $i /usr/share/ca-certificates/igtf/`basename $i`.crt
    done
    update-ca-certificates

The file /etc/nsswitch.conf needs to include these lines to use ldap:

    passwd: compat ldap
    group:  compat ldap

This file can be used in /etc/nslcd.conf:

    uid nslcd
    gid nslcd
    base dc=farmnet,dc=nikhef,dc=nl
    ldap_version 3
    ssl on
    uri ldaps://ldap.nikhef.nl ldaps://hooimijt.nikhef.nl
    tls_cacertfile /etc/ssl/certs/ca-certificates.crt
    timelimit 120
    bind_timelimit 120
    nss_initgroups_ignoreusers root

The libpam-ldap package needs to be installed as well, with the following in /etc/pam_ldap.conf

    base dc=farmnet,dc=nikhef,dc=nl
    uri ldaps://ldap.nikhef.nl/ ldaps://hooimijt.nikhef.nl/
    ldap_version 3
    pam_password md5
    ssl on
    tls_cacertfile /etc/ssl/certs/ca-certificates.crt

At least this seems to work.

Network

The testbed machines are connected to three VLANs, plus a separate VLAN for IPMI and management:

| vlan | description | network | gateway | bridge name | ACL |

|---|---|---|---|---|---|

| 82 | P4CTB | 194.171.96.16/28 | 194.171.96.30 | br82 | No inbound traffic on privileged ports |

| 88 | Open/Experimental | 194.171.96.32/27 | 194.171.96.62 | br88 | Open |

| 97 (untagged) | local | 10.198.0.0/16 | 10.198.3.1 | br0 | testbed only |

| 84 | IPMI and management | 172.20.0.0/16 | 172.20.255.254 | | separate management network for IPMI and Serial-over-LAN |

The untagged VLAN is for internal use by the physical machines. The VMs are connected to bridge devices according to their purpose, and groups of VMs are isolated by using nested VLANs (Q-in-Q). This is an example configuration of /etc/network/interfaces:

    # The primary network interface
    auto eth0
    iface eth0 inet manual
        up ip link set $IFACE mtu 9000

    auto br0
    iface br0 inet dhcp
        bridge_ports eth0

    auto eth0.82
    iface eth0.82 inet manual
        up ip link set $IFACE mtu 9000
        vlan_raw_device eth0

    auto br2
    iface br2 inet manual
        bridge_ports eth0.82

    auto eth0.88
    iface eth0.88 inet manual
        vlan_raw_device eth0

    auto br8
    iface br8 inet manual
        bridge_ports eth0.88

    auto vlan100
    iface vlan100 inet manual
        up ip link set $IFACE mtu 1500
        vlan_raw_device eth0.82

    auto br2_100
    iface br2_100 inet manual
        bridge_ports vlan100

In this example VLAN 82 is configured on eth0 as the interface eth0.82, which is the port of the bridge br2. The nested VLAN 100 (interface vlan100) is stacked on top of eth0.82, and finally the bridge br2_100 is made with vlan100 as its port. VMs that are added to br2_100 will therefore only receive traffic from VLAN 100 nested inside VLAN 82.
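The layering can also be read back mechanically from the configuration file. A hypothetical awk-based sketch, not an existing testbed tool: it only understands the bridge_ports and vlan_raw_device keywords used in the example above and follows the first bridge port only.

```bash
# vlan_chain BRIDGE [FILE]  (hypothetical helper)
# Follow bridge_ports and vlan_raw_device entries in an ifupdown
# interfaces file and print the stack of devices below BRIDGE.
vlan_chain() {
    awk -v start="$1" '
        $1 == "iface"           { cur = $2 }
        $1 == "bridge_ports"    { lower[cur] = $2 }  # first port only
        $1 == "vlan_raw_device" { lower[cur] = $2 }
        END {
            out = start; dev = start; n = 0
            # Walk downwards; the counter guards against loops in the file.
            while ((dev in lower) && n++ < 16) {
                dev = lower[dev]
                out = out " -> " dev
            }
            print out
        }
    ' "${2:-/etc/network/interfaces}"
}
```

On a host with the configuration above, vlan_chain br2_100 prints br2_100 -> vlan100 -> eth0.82 -> eth0.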

NAT

The gateway host for the 10.198.0.0/16 range is bleek.testbed (10.198.3.1). It takes care of network address translation (NAT) to outside networks.

    # iptables -t nat -L -n
    ...
    Chain POSTROUTING (policy ACCEPT)
    target     prot opt source               destination
    ACCEPT     all  --  10.198.0.0/16        10.198.0.0/16
    SNAT       all  --  10.198.0.0/16        0.0.0.0/0            to:194.171.96.17
    ...
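In words: traffic between two testbed addresses is left alone, and anything else leaving 10.198.0.0/16 gets the public address 194.171.96.17 as its source. A sketch in bash arithmetic that mirrors only the two rules shown (the elided rules are not modelled); ip_to_int, in_cidr and nat_action are hypothetical helpers.

```bash
# ip_to_int A.B.C.D: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    local IFS=. octets
    read -r -a octets <<< "$1"
    echo $(( (octets[0] << 24) | (octets[1] << 16) | (octets[2] << 8) | octets[3] ))
}

# in_cidr ADDRESS NETWORK/PREFIX: succeed if ADDRESS lies inside the network.
in_cidr() {
    local addr net prefix mask
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Keep the top PREFIX bits; bash integers are 64 bits wide, so mask to 32.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( (addr & mask) == (net & mask) ))
}

# nat_action SRC DST: which of the two POSTROUTING rules above matches.
nat_action() {
    if in_cidr "$1" 10.198.0.0/16 && in_cidr "$2" 10.198.0.0/16; then
        echo "ACCEPT"
    elif in_cidr "$1" 10.198.0.0/16; then
        echo "SNAT to:194.171.96.17"
    else
        echo "no rule (policy ACCEPT)"
    fi
}
```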

Multicast

The systems for clustered LVM and Ganglia rely on multicast to work. Some out of the box Debian installations end up with a host entry like

127.0.1.1 arrone.testbed

These should be removed!
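A minimal sketch for removing such entries, assuming GNU or BSD sed (both accept -i with an attached backup suffix); drop_127_0_1_1 is a hypothetical helper, and a copy of the original is kept with the suffix .bak. Afterwards, make sure the host name still resolves to the machine's real address, e.g. through DNS.

```bash
# drop_127_0_1_1 [FILE]  (hypothetical helper)
# Delete every line that maps 127.0.1.1 to a name; default FILE is /etc/hosts.
drop_127_0_1_1() {
    local file=${1:-/etc/hosts}
    sed -i.bak '/^[[:space:]]*127\.0\.1\.1[[:space:]]/d' "$file"
}
```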

Storage

The hypervisors of the testbed all connect to the same shared storage backend (a Fujitsu DX200 system called KLAAS) over iSCSI. The storage backend exports a number of pools to the testbed. These are formatted as LVM groups and shared through a clustered LVM setup.

In libvirt, the VG is known as a 'pool' under the name vmachines (location /dev/p4ctb).

Clustered LVM setup

The clustering of nodes is provided by corosync. Here are the contents of the configuration file /etc/corosync/corosync.conf:

    totem {
        version: 2
        cluster_name: p4ctb
        token: 3000
        token_retransmits_before_loss_const: 10
        clear_node_high_bit: yes
        crypto_cipher: aes256
        crypto_hash: sha256
        interface {
            ringnumber: 0
            bindnetaddr: 10.198.0.0
            mcastport: 5405
            ttl: 1
        }
    }

    logging {
        fileline: off
        to_stderr: no
        to_logfile: no
        to_syslog: yes
        syslog_facility: daemon
        debug: off
        timestamp: on
        logger_subsys {
            subsys: QUORUM
            debug: off
        }
    }

    quorum {
        provider: corosync_votequorum
        expected_votes: 2
    }

The crypto settings refer to a file /etc/corosync/authkey, which must be present on all systems. There is no predefined list of cluster members: any node can join, which is why the shared key is a good idea, since you don't want unexpected members joining the cluster. The quorum of 2 follows from there being only 3 machines at the moment.

As long as the cluster is quorate everything should be fine. That means that at any time, one of the machines can be maintained, rebooted, etc. without affecting the availability of the storage on the other nodes.

As long as at least one node has the cluster up and running, others should be able to join even if the cluster is not quorate. That means that if only a single node out of three is up, the cluster is no longer quorate and storage queries are blocked. But when another node joins the cluster is again quorate and should unblock.
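The arithmetic behind this, assuming one vote per node and the majority rule used by votequorum (quorum is more than half of the expected votes):

$$
q = \left\lfloor \frac{n}{2} \right\rfloor + 1, \qquad n = 3 \;\Rightarrow\; q = \lfloor 1.5 \rfloor + 1 = 2
$$

With three nodes any two form a quorum, so one node can be down for maintenance, while a single remaining node (1 < 2) blocks storage operations until a second node rejoins.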

installation

Based on Debian 9.

Install the required packages:

apt-get install corosync clvm

Set up clustered locking in lvm:

sed -i 's/^\([[:space:]]*locking_type\) = 1$/\1 = 3/' /etc/lvm/lvm.conf

Make sure all nodes have the same corosync.conf file and the same authkey. A key can be generated with corosync-keygen.

Running

Start corosync

systemctl start corosync

Test the cluster status with

    corosync-quorumtool -s
    dlm_tool -n ls

Both should show all nodes.
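For use in scripts, the quorum state can be read from the Quorate line of corosync-quorumtool -s. This sketch assumes that line has the form "Quorate: Yes" (as in corosync 2.x output); is_quorate is a hypothetical helper.

```bash
# is_quorate (hypothetical helper): read `corosync-quorumtool -s` output on
# stdin and succeed only if it reports the cluster as quorate.
is_quorate() {
    grep -Eq '^Quorate:[[:space:]]+Yes'
}
```

Usage: corosync-quorumtool -s | is_quorate && echo quorate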

Start the iSCSI and multipath daemons:

    systemctl start iscsid
    systemctl start multipathd

Check that the iSCSI paths are visible:

    # multipath -ll
    3600000e00d2900000029295000110000 dm-1 FUJITSU,ETERNUS_DXL
    size=2.0T features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw
    |-+- policy='service-time 0' prio=50 status=active
    | |- 6:0:0:1 sdi 8:128 active ready running
    | `- 3:0:0:1 sdg 8:96  active ready running
    `-+- policy='service-time 0' prio=10 status=enabled
      |- 4:0:0:1 sdh 8:112 active ready running
      `- 5:0:0:1 sdf 8:80  active ready running
    3600000e00d2900000029295000100000 dm-0 FUJITSU,ETERNUS_DXL
    size=2.0T features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw
    |-+- policy='service-time 0' prio=50 status=active
    | |- 4:0:0:0 sdb 8:16  active ready running
    | `- 5:0:0:0 sdc 8:32  active ready running
    `-+- policy='service-time 0' prio=10 status=enabled
      |- 3:0:0:0 sdd 8:48  active ready running
      `- 6:0:0:0 sde 8:64  active ready running
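With two LUNs and four paths each, a quick check is to count the paths in state "active ready running" per multipath map. A sketch assuming the multipath -ll layout shown above, where each map line starts with the WWID followed by dm-N (with user_friendly_names enabled the line starts with an alias instead, and this pattern would not match); count_ready_paths is a hypothetical helper.

```bash
# count_ready_paths (hypothetical helper): read `multipath -ll` output on
# stdin and print each map's WWID with its number of healthy paths.
count_ready_paths() {
    awk '
        # A map line: WWID, then the dm-N device name.
        /^[0-9a-f]+ dm-[0-9]+ / { wwid = $1; paths[wwid] += 0; next }
        # A path line belonging to the most recent map.
        /active ready running/ && wwid != "" { paths[wwid]++ }
        END { for (w in paths) print w, paths[w] }
    '
}
```

With the output above, multipath -ll | count_ready_paths should report 4 for each of the two maps.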

Only then start the clustered lvm.

systemctl start lvm2-cluster-activation.service

Troubleshooting

Cluster log messages are found in /var/log/syslog.

Services

A number of services are maintained in the testbed, most of them running on bleek.nikhef.nl.

| service | host(s) | description |

|---|---|---|

| DHCP | bleek | DHCP is part of dnsmasq |

| backup | bleek.nikhef.nl | Backup to SARA with Tivoli Storage Manager |

| Squid | span.testbed | An 800MB squid cache, to ease stress on external install servers |

| koji | koji-hub.testbed | Package build system for Fedora/Red Hat RPMs |

| gitosis | bleek.nikhef.nl | shared git repositories |

| DNS | bleek.testbed | DNS is part of dnsmasq |

| home directories | bleek | NFS exported to hosts in vlan2 and vlan17 |

| X509 host keys and pre-generated ssh keys | bleek | NFS exported directory /var/local/hostkeys |

| kickstart | bleek | |

| preseed | bleek | |

| firstboot | bleek | |

| ganglia | bleek | Multicast 239.1.1.9; see the Ganglia web interface on ploeg. |

| Nagios | bleek | https://bleek.nikhef.nl:8444/nagios/ (requires authorisation) |

Backup

The home directories and other precious data on bleek are backed up to SurfSARA with the Tivoli Storage Manager (TSM) system. To interact with the system, run the dsmj tool. A home-brew init.d script, /etc/init.d/adsm, starts the service. The key is kept in /etc/adsm/TSM.PWD. The backup logs to /var/log/dsmsched.log, and this log is rotated automatically as configured in /opt/tivoli/tsm/client/ba/bin/dsm.sys.

Bleek is also backed up daily to beerput with rsync.

Since bleek has been virtualised the risk of data loss through a disk failure has been greatly reduced. This means that the need to maintain multiple backup strategies is probably not so urgent anymore.

Squid cache

The VM host span.testbed doubles as a squid cache, mainly to relieve stress on the Fedora servers for the nightly builds by koji.

    diff /etc/squid/squid.conf{.default,}
    632,633c632,633
    < #acl our_networks src 192.168.1.0/24 192.168.2.0/24
    < #http_access allow our_networks
    ---
    > acl our_networks src 10.198.0.0/16 194.171.96.16/28
    > http_access allow our_networks
    1786a1787
    > cache_dir diskd /srv/squid 800000 16 256

Koji

The virtual machines koji-hub.testbed, koji-builder.testbed and koji-boulder.testbed run automated builds of grid security middleware.

Hardware

Changes here should probably also go to NDPF System Functions.

| name | ipmi name* | type | chipset | #cores | mem | OS | disk | service tag | Fibre Channel | location | remarks |

|---|---|---|---|---|---|---|---|---|---|---|---|

| blade13 | bl0-13 | PEM610 | E5504 @ 2.00GHz | 2×4 | 16GB | Debian 6, KVM | 70 GB + 1 TB Fibre Channel (shared) | 5NZWF4J | yes | C08 blade13 | |

| blade14 | bl0-14 | PEM610 | E5504 @ 2.00GHz | 2×4 | 16GB | Debian 6, KVM | 70 GB | 4NZWF4J | yes | C08 blade14 | |

| melkbus | bl0-02 | PEM600 | Intel E5450 @3.00GHz | 2×4 | 32GB | VMWare ESXi | 2× 320GB SAS disks + 1 TB Fibre Channel (shared) | 76T974J | yes | C08, blade 2 | |

| arrone | arrone | PE1950 | Intel E5320 @ 1.86GHz | 2×4 | 8GB | Debian 6, KVM | 70 GB + 400 GB iSCSI (shared) | 982MY2J | no | C10 | |

| aulnes | aulnes | PE1950 | Intel E5320 @ 1.86GHz | 2×4 | 8GB | Debian 6, KVM | 70 GB + 400 GB iSCSI (shared) | B82MY2J | no | C10 | |

| toom | toom | PE1950 | Intel E5440 @ 2.83GHz | 2×4 | 16GB | Debian 6, KVM | Hardware raid1 2×715GB disks | DC8QG3J | no | C10 | |

| span | span | PE2950 | Intel E5440 @ 2.83GHz | 2×4 | 24GB | Debian 6, KVM | Hardware raid10 on 4×470GB disks (950GB net) | FP1BL3J | no | C10 | plus squid proxy |

| kudde | kudde | PE1950 | Intel E5440 @ 2.83GHz | 2×4 | 16GB | CentOS 5, Xen | Hardware raid1 2×715GB disks | CC8QG3J | | C10 | Contains hardware encryption tokens for robot certificates; managed by Jan Just |

| storage | put | PE2950 | Intel E5150 @ 2.66GHz | 2×2 | 8GB | FreeNAS 8.3 | 6× 500 GB SATA, raidz (ZFS) | HMXP93J | | C03 | former garitxako |

| ent | — | Mac Mini | Intel Core Duo @1.66GHz | 2 | 2GB | OS X 10.6 | SATA 80GB | — | no | C24 | OS X box (no virtualisation) |

| ren | bleek | PE1950 | Intel 5150 @ 2.66GHz | 2×2 | 8GB | CentOS 5 | software raid1 2×500GB disks | 7Q9NK2J | no | C10 | High Availability, dual power supply; former bleek |

- *ipmi name is used for IPMI access; use <name>.ipmi.nikhef.nl.

- System details such as serial numbers can be retrieved from the command line with dmidecode -t 1.

- The service tags can be retrieved through IPMI, but unless you want to send raw commands with ipmitool, you first need freeipmi-tools. This contains ipmi-oem, which can be called thus:

ipmi-oem -h host.ipmi.nikhef.nl -u username -p password dell get-system-info service-tag

Most machines run Debian wheezy with KVM for virtualization, managed by libvirt.

See the official list of machines for the most current view.

Console access to the hardware

In some cases direct ssh access to the hardware may not work anymore (for instance when the gateway host is down). All machines have been configured to have a serial console that can be accessed through IPMI.

- For details, see Serial Consoles. The setup for Debian squeeze is slightly different.

- Access is done with: ipmitool -I lanplus -H name.ipmi.nikhef.nl -U user sol activate

- SOL access needs to be activated in the BIOS once, by setting console redirection through COM2.
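A small wrapper for the command above. A sketch only: sol_console is a hypothetical helper, it defaults the IPMI user to the local user name, and IPMITOOL is an override hook (IPMITOOL=echo prints the command instead of running it).

```bash
# sol_console NAME [USER]  (hypothetical helper)
# Open a Serial-over-LAN console on NAME.ipmi.nikhef.nl.
sol_console() {
    local host=$1 user=${2:-$USER}
    "${IPMITOOL:-ipmitool}" -I lanplus -H "$host.ipmi.nikhef.nl" -U "$user" sol activate
}
```

To leave the console, type ~. at the start of a line; when you are connected through ssh, type ~~. so that ssh passes the escape on to ipmitool.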

For older systems that do not have a web interface for IPMI, the command-line version can be used. Install the OpenIPMI service so root can use ipmitool. Here is a sample of commands to add a user and give SOL access.

    ipmitool user enable 5
    ipmitool user set name 5 ctb
    ipmitool user set password 5 '<blah>'
    ipmitool channel setaccess 1 5 ipmi=on
    # make the user administrator (4) on channel 1.
    ipmitool user priv 5 4 1
    ipmitool channel setaccess 1 5 callin=on ipmi=on link=on
    ipmitool sol payload enable 1 5

Serial over LAN for hardware running Debian

On Debian squeeze you need to tell grub2 what to do with the kernel command line in the file /etc/default/grub. Add or uncomment the following settings:

    GRUB_CMDLINE_LINUX_DEFAULT=""
    GRUB_CMDLINE_LINUX="console=tty0 console=ttyS1,115200n8"
    GRUB_TERMINAL=console
    GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=1 --word=8 --parity=no --stop=1"

Then run update-grub.
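After a reboot, the running kernel command line shows whether the serial console setting took effect. A sketch; has_serial_console is a hypothetical helper that only looks for the console=ttyS1 option configured in GRUB_CMDLINE_LINUX above.

```bash
# has_serial_console [FILE]  (hypothetical helper)
# Succeed if the kernel command line (default /proc/cmdline) contains
# console=ttyS1, with or without speed settings.
has_serial_console() {
    tr ' ' '\n' < "${1:-/proc/cmdline}" | grep -Eq '^console=ttyS1(,|$)'
}
```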

Installing Debian and libvirt on new hardware

Just a few notes. After setting up the basics by adding the hardware address to /etc/dnsmasq.d/pxeboot (see the examples there) and setting up links in /srv/tftpboot/pxelinux.cfg/ to debian6-autoinstall, pxeboot the machine and wait for the installation to complete. Then, set up bridge configurations like so in /etc/network/interfaces:

auto br0
iface br0 inet dhcp
    bridge_ports eth0

auto br2
iface br2 inet manual
    bridge_ports eth0.2

auto br8
iface br8 inet manual
    bridge_ports eth0.8
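Each extra VLAN follows the same pattern: bridge brN on the tagged interface eth0.N. A minimal sketch that prints such a stanza is shown below. The function name is hypothetical, and you should check which VLANs are actually trunked to the host before adding one.

```shell
# Sketch: print an /etc/network/interfaces stanza for VLAN N, following the
# br2/br8 pattern (bridge brN on eth0.N). The second argument overrides eth0.
vlan_bridge_stanza() {
    vlan=$1 iface=${2:-eth0}
    printf 'auto br%s\niface br%s inet manual\n    bridge_ports %s.%s\n' \
        "$vlan" "$vlan" "$iface" "$vlan"
}
```

For example, vlan_bridge_stanza 3 >> /etc/network/interfaces followed by ifup br3. VLAN 3 is only an illustration.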

Install the vlan package:

apt-get install vlan

In /etc/sysctl.conf:

net.ipv6.conf.all.forwarding=1
net.ipv6.conf.all.autoconf = 0
net.ipv6.conf.all.accept_ra = 0
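These settings can be added with a small helper, sketched below. The helper only appends keys that are not there yet, so running it twice does no harm. The function name is made up. It defaults to /etc/sysctl.conf but accepts another path, for example a scratch file.

```shell
# Sketch: append the IPv6 settings to a sysctl file unless a line for the same
# key already exists (written as "key=value" or "key = value").
add_ipv6_sysctl() {
    conf=${1:-/etc/sysctl.conf}
    for setting in net.ipv6.conf.all.forwarding=1 \
                   net.ipv6.conf.all.autoconf=0 \
                   net.ipv6.conf.all.accept_ra=0; do
        key=${setting%%=*}
        # the dots in the key act as regex wildcards, which is harmless here
        if ! grep -q "^[[:space:]]*$key[[:space:]]*=" "$conf" 2>/dev/null; then
            echo "$setting" >> "$conf"
        fi
    done
}
```

Afterwards, sysctl -p loads /etc/sysctl.conf without a reboot.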

Migrating Xen VMs to KVM

These are a few temporary notes made during the conversion of VMs on toom to blade14.

preparation

- Log in to the VM;

- if this is a paravirtualized machine, install the 'kernel' package in addition to the 'kernel-xen' package or the machine won't run at all.

- Edit /etc/grub.conf to remove the xvc0 console setting.

- Edit /etc/inittab to remove the lone serial console and re-enable the normal ttys.

- Restore /etc/sysconfig/network-scripts/ifcfg-eth0.bkp to start the network (actually this must be done after the migration, strangely).

- Then shut down the machine.

- After shutdown, copy the VM disk image to put:/mnt/put/stampede or (if LVM was used) dd the volume to put.
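The GRUB edit in the preparation steps can be scripted. This is a sketch that assumes the setting appears as a console=xvc0 kernel argument. On RHEL the real file is /boot/grub/grub.conf, and /etc/grub.conf is normally a symlink to it. GNU sed -i would replace such a symlink with a plain file, so the sketch edits the target and keeps a .bak copy.

```shell
# Sketch: remove the console=xvc0 kernel argument (and any options appended to
# it) from the VM's GRUB config; the original is saved as <file>.bak.
strip_xvc0() {
    conf=${1:-/boot/grub/grub.conf}
    sed -i.bak -e 's/[[:space:]]*console=xvc0[^[:space:]]*//g' "$conf"
}
```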

Non-Xen VMs are easier to transfer ;-)

create a new machine

Connect to blade14.testbed with virt-manager and start the new machine wizard.

- Use an existing image (the one you just made).

- Set a fixed MAC address (found in /root/xen/machine-definition.vm).

- Set the disk emulation to IDE.

- On first boot, intercept the grub menu to choose the non-Xen kernel.

- After booting up:

  - fix the network (/etc/sysconfig/network-scripts/ifcfg-eth0);

  - remove the xen kernels:

    rpm -q kernel-xen | xargs rpm -e
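The MAC address for the new machine can be read from the old Xen definition instead of being copied by hand. This sketch assumes the usual Xen vif syntax (mac=xx:xx:xx:xx:xx:xx inside the vif list) and prints the first MAC it finds. The function name is hypothetical.

```shell
# Sketch: print the first MAC address in an old Xen domain config, e.g. from
#   vif = [ 'mac=00:16:3E:C6:02:0E, bridge=xenbr0' ]
xen_mac() {
    grep -o 'mac=[0-9A-Fa-f:]\{17\}' "$1" | head -n 1 | cut -d= -f2
}
```

It can then be used directly in virt-install, e.g. --network bridge=br0,mac=$(xen_mac /root/xen/machine-definition.vm).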

Command-line example:

virt-install --import --disk vol=put.testbed/cert-debian6-64.testbed.img \
    --name cert-debian6-64.testbed --network bridge=br0,mac=00:16:3E:C6:00:16 \
    --os-type linux --os-variant debiansqueeze --ram 1024

Or a former RHEL machine:

virt-install --import --disk vol=put.testbed/genome3.testbed.img,bus=ide \
    --name genome3.testbed --network bridge=br0,mac=00:16:3E:C6:02:0E \
    --os-type linux --os-variant rhel5.4 --ram 800

Note that if the host runs out of memory, you get a confusing error message like:

libvirtError: internal error process exited while connecting to monitor: char device redirected to /dev/pts/12

This just means qemu could not create the domain!
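To avoid that message, you can check the free memory on the host before running virt-install. This is a hypothetical helper, not a libvirt feature.

- It reads MemAvailable from /proc/meminfo.
- On kernels without that field (such as wheezy's 3.2), it approximates free memory as MemFree + Buffers + Cached.
- It ignores qemu's own overhead, so leave some margin.

```shell
# Sketch: estimate the available memory in MiB from /proc/meminfo (or a file
# in the same format passed as the first argument).
available_mib() {
    awk '/^MemAvailable:/ { avail = $2; have = 1 }
         /^(MemFree|Buffers|Cached):/ { sum += $2 }
         END { print int((have ? avail : sum) / 1024) }' "${1:-/proc/meminfo}"
}

# Succeeds if at least the requested number of MiB (the --ram value) is free.
enough_ram_for() {
    want=$1
    have=$(available_mib "$2")
    if [ "$have" -lt "$want" ]; then
        echo "only ${have} MiB available, guest wants ${want} MiB" >&2
        return 1
    fi
}
```

For example, enough_ram_for 800 && virt-install ... --ram 800.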

Installing Debian on blades with Fiber Channel

Although FC support on Debian works fine, using the multipath-tools-boot package is a bit tricky. It updates the initrd to include the multipath libraries and tools, making them available at boot time. On blade-13 this went wrong: on reboot the system was unable to mount the root partition (the message was 'device or resource busy') because the device mapper had somehow taken hold of the SCSI disk. By changing the root=UUID=xxxx stanza in the GRUB menu to root=/dev/dm-2 (this was guess-work) I managed to boot the system. There were probably several possible remedies:

- rerun update-grub. This should replace the UUID= with a link to /dev/mapper/xxxx-part1

- blacklist the disk in the device mapper (and rerun mkinitramfs)

- remove the multipath-tools-boot package altogether.

I opted for blacklisting; this is what's in /etc/multipath.conf:

blacklist {
    wwid 3600508e000000000d6c6de44c0416105
}
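The WWID of a local disk can usually be found with /lib/udev/scsi_id --whitelisted --device=/dev/sda. That path is where Debian wheezy's udev installs the tool, so treat it as an assumption on other releases. Once you have the WWIDs, a sketch like the one below (hypothetical function name) prints the blacklist section. It only suits a multipath.conf that has no blacklist section yet.

```shell
# Sketch: print a multipath.conf blacklist section for the WWIDs given as
# arguments.
multipath_blacklist() {
    echo 'blacklist {'
    for wwid in "$@"; do
        printf '    wwid %s\n' "$wwid"
    done
    echo '}'
}
```

After changing /etc/multipath.conf, rebuild the initrd (e.g. update-initramfs -u) so that the copy used at boot time sees the blacklist too.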

Migration plans to a cloud infrastructure

Previous testbed cloud experiences are reported here.

Currently, using plain libvirt seems to fit most of our needs.