Difference between revisions of "Master Projects"


The following Master thesis research projects are offered at Nikhef. If you are interested in one of these projects, please contact the coordinator listed with the project.

== Projects with a 2024 start [WORK IN PROGRESS, please look below for older projects] ==

=== ALICE: Search for new physics with 4D tracking at the most sensitive vertex detector at the LHC ===

With the newly installed Inner Tracking System, consisting entirely of monolithic detectors, ALICE is very sensitive to particles with low transverse momenta, more so than ATLAS and CMS. This will be even more the case for the ALICE upgrade detector in 2033. By using timing information along a track, this detector could potentially be even more sensitive to long-lived particles that leave peculiar signatures in the tracker, such as disappearing or kinked tracks. In this project you will investigate how timing information in the different tracking layers can improve or even enable a search for new physics beyond the Standard Model in ALICE. If you show a possibility for major improvements, this can have real consequences for the choice of sensors for this ALICE inner tracker upgrade.

''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld] and [mailto:Panos.Christakoglou@nikhef.nl Panos Christakoglou]''

=== ALICE: Connecting the hot and cold QCD matter by searching for the strongest magnetic field in nature ===

In a non-central collision between two Pb ions, with a large value of the impact parameter, the charged nucleons that do not participate in the interaction (called spectators) create strong magnetic fields. A back-of-the-envelope calculation using the Biot-Savart law brings the magnitude of this field close to 10^19 Gauss, in agreement with state-of-the-art theoretical calculations, making it the strongest magnetic field in nature. The presence of this field could have direct implications for the motion of final-state particles. The magnetic field, however, decays rapidly. The decay rate depends on the electric conductivity of the medium, which is experimentally poorly constrained. Establishing the presence of this magnetic field is the main goal of this project: it has so far not been confirmed experimentally, and it can have implications for measurements of gravitational waves emitted from the merger of neutron stars.

''Contact: [mailto:Panos.Christakoglou@nikhef.nl Panos Christakoglou]''

| + | |||

=== ALICE/LHCb Tracking: Innovative tracking techniques exploiting modern heterogeneous architectures ===

The reconstruction of charged particle tracks is one of the most computationally demanding components of modern high energy physics experiments. In particular, the upcoming High-Luminosity Large Hadron Collider (HL-LHC) makes the use of fast tracking algorithms on modern computing architectures with many cores and accelerators essential. In this project we will investigate innovative, machine-learning-based, experiment-agnostic tracking algorithms on modern architectures, e.g. GPUs and FPGAs.

| + | |||

| + | ''Contact: [mailto:jdevries@nikhef.nl Jacco de Vries] and [mailto:Panos.Christakoglou@nikhef.nl Panos Christakoglou]'' | ||

| + | |||

| + | === ATLAS: Search for very rare Higgs decays to second-generation fermions === | ||

While the Higgs boson coupling to fermions of the third generation has been established experimentally, the investigation of the Higgs boson coupling to the light fermions of the second generation will be a central project for the current data-taking period of the LHC (2022-2025). The Higgs boson decay to muons is the most sensitive channel for probing this coupling. In this project, event selection algorithms for Higgs boson decays to muons in associated production with a gauge boson (VH) will be developed, with the aim of distinguishing signal events from background processes like Drell-Yan and WZ boson production. For this purpose, the candidate will implement and validate deep learning algorithms, and extract the final results based on a fit to the output of the deep learning classifier.

''Contact: [mailto:oliver.rieger@nikhef.nl Oliver Rieger] and [mailto:verkerke@nikhef.nl Wouter Verkerke]''

=== ATLAS: Advanced deep-learning techniques for lepton identification ===

The ATLAS experiment at the Large Hadron Collider facilitates a broad spectrum of physics analyses. A critical aspect of these analyses is the efficient and accurate identification of leptons, which are crucial for both signal detection and background event rejection. Distinguishing between prompt leptons, arising directly from the collision, and nonprompt leptons, originating from heavy flavour hadron decays, is a challenging task. This project aims to develop and implement advanced techniques based on deep learning models to push lepton identification beyond the capabilities of current standard methods.

''Contact: [mailto:oliver.rieger@nikhef.nl Oliver Rieger] and [mailto:verkerke@nikhef.nl Wouter Verkerke]''

=== ATLAS: Probing CP-violation in the Higgs sector with the ATLAS experiment ===

The Standard Model Effective Field Theory (SMEFT) provides a systematic approach to test the impact of new physics at the energy scale of the LHC through higher-dimensional operators. The scarcity of antimatter in the cosmos arises from the slight differences in the behavior of particles and their antiparticle counterparts, known as CP-violation. The current data-taking period of the LHC is expected to yield a comprehensive dataset, enabling the investigation of CP-odd SMEFT operators in the Higgs boson's interactions with other particles. The interpretation of experimental data using SMEFT requires a particular interest in solving complex technical challenges, advanced statistical techniques, and a deep understanding of particle physics.

''Contact: [mailto:lbrenner@nikhef.nl Lydia Brenner], [mailto:oliver.rieger@nikhef.nl Oliver Rieger] and [mailto:verkerke@nikhef.nl Wouter Verkerke]''

=== ATLAS: Signal and background sensitivity in Standard Model Effective Field Theory (SMEFT) ===

Complex statistical combinations of large sectors of the ATLAS scientific program are currently being used to obtain the best experimental sensitivity to SMEFT parameters. However, to achieve a fully consistent investigation of SMEFT and to push the limit of what is possible with the data already collected, the effects of background modifications must be included. Joining our efforts on this topic means contributing to a cutting-edge investigation that requires both motivation to solve complex technical challenges and a willingness to acquire a broad knowledge of experimental particle physics.

Contact: ''[mailto:avisibil@nikhef.nl Andrea Visibile] and [mailto:lbrenner@nikhef.nl Lydia Brenner]''

=== ATLAS: Performing a Bell test in Higgs to di-boson decays ===

Recently, theorists [1] have proposed to perform a Bell test in Higgs to di-boson decays. This is a fundamental test not only of quantum mechanics but also of quantum field theory, using the elusive scalar Higgs particle. At Nikhef we have started to brainstorm on the experimental aspects of this challenging measurement. Thanks to the studies of a PhD student [2], we have considerable experience in the reconstruction of the Higgs rest frame angles that are essential to perform a Bell test. Is there a master student who wants to join our efforts to study the ''"spooky action at a distance"'' in Higgs to WW decays?

''Contact: [mailto:Peter.Kluit@nikhef.nl Peter Kluit]''

[1] Review article <nowiki>https://arxiv.org/pdf/2402.07972.pdf</nowiki>

[2] <nowiki>https://www.nikhef.nl/pub/services/biblio/theses_pdf/thesis_R_Aben.pdf</nowiki>

=== ATLAS: A new timing detector - the HGTD ===

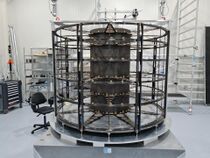

The ATLAS detector is going to get a new capability: a timing detector. This allows us to reconstruct tracks not only in the 3 dimensions of space but also to measure very precisely (at the picosecond level) the time at which the particles pass the sensitive layers of the HGTD detector. The added information helps to construct the trajectories of the particles created at the LHC in 4 dimensions and will ultimately lead to a better reconstruction of physics at ATLAS. The new HGTD detector is still under construction and work needs to be done on different levels, such as understanding the detector response (taking measurements in the lab and performing simulations) or developing algorithms to reconstruct the particle trajectories (programming and analysis work).

'''Several projects are available within the context of the new HGTD detector:'''

# One can choose to focus on '''''the impact on physics analysis performance''''' by studying how the timing measurements can be included in the reconstruction of tracks, and how much this improves our understanding of the physical processes that produce the particles in the LHC collisions. With this work you will be part of the ATLAS group at Nikhef.

# The second possibility is to '''''test the sensors in our lab''''' and in test-beam setups at CERN/DESY. The analysis will be performed in the context of the ATLAS HGTD test beam group, in connection with both the ATLAS group and the R&D department at Nikhef.

# The third is to contribute to an ongoing effort '''''to precisely simulate/model the silicon avalanche detectors''''' in the Allpix2 framework. There are several models that try to describe the detector's response. The models depend on operating temperature, field strengths and radiation damage. We are getting close to being able to model our detector, but we are not there yet. This work will be within the ATLAS group.

Contact: ''[mailto:hella.snoek@nikhef.nl Hella Snoek]''

| − | |||

| − | + | === ATLAS: Studying rare modes of Higgs boson production at the LHC === | |

The Higgs boson is a crucial piece of the Standard Model and its most recently discovered particle. Studying Higgs boson production and decay at the LHC might hold the key to unlocking new information about the physical laws governing our universe. With the LHC now in its third run, we can also use the enormous amounts of data being collected to study Higgs boson production modes we have not previously been able to access. For instance, we can look at the production of a Higgs boson via the fusion of two vector bosons, accompanied by emission of a photon, with subsequent H->WW decay. This state is experimentally distinctive and should be accessible to us using the current dataset of the LHC. It is also theoretically interesting because it probes the Higgs boson’s interaction with W bosons. This exact interaction is a cornerstone of electroweak symmetry breaking, the process by which particles gain mass, so studying it provides a window onto a fundamental part of the Standard Model. This project will study the feasibility of measuring this or another rare Higgs production mode using H->WW decays, providing a chance to be involved in the design of an analysis from the ground up.

| − | + | ''Contact: [mailto:rhayes@nikhef.nl Robin Hayes], [mailto:f.dias@nikhef.nl Flavia de Almeida Dias]'' | |

| − | |||

| − | + | === ATLAS: Exploring triboson polarisation in loop-induced processes at the LHC === | |

Spin is a fundamental, quantum mechanical property carried by (most) elementary particles. When high-energy particles scatter, their spin influences how angular momentum is propagated through the process and ultimately how final-state particles are (geometrically) distributed. Helicity is the projection of the spin vector onto the momentum. For example, in the loop-induced process gg > W+W-Z, the angular separation between the various decay products of the W and Z bosons depends on the helicity polarisation of the intermediate W and Z bosons. The aim of this project is to explore helicity polarisation in multiboson processes, and specifically the gg > WWZ process, at the Large Hadron Collider. This project is at the interface between theory and experiment, and you will work with Monte Carlo generators, analysis design and sensitivity studies.

| − | + | ''Contact: [mailto:f.dias@nikhef.nl Flavia de Almeida Dias]'' | |

| − | |||

| − | + | === ATLAS: High-Performance Simulations for High-Energy Physics Experiments - Multiple Enhancements=== | |

| + | The role of simulation and synthetic data generation for High-Energy Physics (HEP) research is profound. While there are physics-accurate simulation frameworks available to provide the most realistic data syntheses, these tools are slow. Additionally, the output from physics-accurate simulations is closely tied to the experiment that the simulation was developed for and its software. | ||

| − | + | Fast simulation frameworks on the other hand, can drastically simplify the simulation, while still striking a balance between speed and accuracy of the simulated events. The applications of simplified simulations and data are numerous. We will be focusing on the role of such data as an enabler for Machine Learning (ML) model design research. | |

| − | |||

| − | + | This project aims to extend the REDVID simulation framework [1, 2] through addition of new features. The features considered for this iteration include: | |

| − | + | *Interaction with common Monte Carlo event generators: To calculate hit points for imported events | |

| − | + | *Addition of basic magnetic field effect: Simulation of a simplified, uniform magnetic field, affecting charged particle trajectories | |

| + | *Inclusion of pile-up effects during simulation: Multiple particle collisions occurring in close vicinity | ||

| + | *Indication of bunch size | ||

| + | *Spherical coordinates | ||

| + | *Vectorised helical tracks | ||

| + | *Considerations for reproducibility of collision events | ||
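The magnetic field and helical track features listed above can be illustrated with a minimal sketch. It is not REDVID code: the function name, its parameters and the choice of a field along +z are assumptions made for illustration only. It uses the standard relation R [m] = pT [GeV/c] / (0.3 |q| B [T]) for the radius of curvature of a charged particle in a uniform field.

```python
import math


def helix_points(pt_gev, pz_gev, charge, b_tesla, n_points=50, turn_fraction=0.25):
    """Sample points on the helix of a charged particle that starts at the origin.

    Assumptions (illustrative only): uniform field along +z, initial transverse
    momentum along +x, no energy loss, no scattering.
    """
    if charge == 0 or b_tesla <= 0 or pt_gev <= 0:
        raise ValueError("need a charged particle, a positive field and positive pT")
    # Radius of curvature in metres, pT in GeV/c, B in tesla, charge in units of e.
    radius = pt_gev / (0.3 * abs(charge) * b_tesla)
    # F = q v x B bends a positive charge moving along +x towards -y when B is along +z.
    sign = -1.0 if charge > 0 else 1.0
    points = []
    for i in range(n_points):
        phi = 2.0 * math.pi * turn_fraction * i / (n_points - 1)
        x = radius * math.sin(phi)
        y = sign * radius * (1.0 - math.cos(phi))
        # The transverse path length is R * phi; z advances by pz / pT per unit of it.
        z = radius * phi * pz_gev / pt_gev
        points.append((x, y, z))
    return radius, points


radius, points = helix_points(pt_gev=1.0, pz_gev=0.5, charge=+1, b_tesla=2.0)
print(f"radius of curvature: {radius:.3f} m, first point: {points[0]}")
```

Intersecting such a helix with the detector layers would give the hit points; a vectorised version could replace the loop with array operations.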

| − | + | The project is part of an ongoing effort to train and test ML models for particle track reconstruction for the HL-LHC. The improved version of REDVID can be used by the student and other users to generate training data for ML models. Depending on the progress and the interest, a secondary goal could be to perform comparisons with physics-accurate simulations or to investigate the impact of the new features on developed ML models. | |

'''Bonus:''' The student will be encouraged and supported to publish the output of this study in a relevant journal, such as "Data in Brief" by Elsevier.

====Appendix - Terminology====

The terminology for the considered simulations and their features is domain-specific and is explained below:

*Synthetic data: Data generated during a simulation, which resembles real data to a limited extent.

| + | *Physics-accurate simulation: A type of simulation that strongly takes into account real-world physical interactions and utilises physics formulas to achieve this. | ||

| + | *Complexity-aware simulation framework: A simulator which can be configured with different levels of simulation complexity, making the simulation closer or further away from the real-world case. | ||

| + | *Complexity-reduced data set: Simplified data resulting from simplified simulations. This is in comparison to real data, or data generated by physics-accurate simulations. | ||

| + | |||

| + | ==== References==== | ||

| + | [1] U. Odyurt et al., 2023, "Reduced Simulations for High-Energy Physics, a Middle Ground for Data-Driven Physics Research". URL: https://doi.org/10.48550/arXiv.2309.03780 | ||

| + | |||

| + | [2] U. Odyurt, 2023, "REDVID Simulation Framework". URL: https://virtualdetector.com/redvid | ||

| + | |||

| + | Contact: ''[mailto:uodyurt@nikhef.nl dr. ir. Uraz Odyurt], [mailto:roel.aaij@nikhef.nl dr. Roel Aaij]'' | ||

| + | |||

| + | === ATLAS: High-Performance Simulations for High-Energy Physics Experiments - Electron and Muon Simulation (2 projects) === | ||

| + | The role of simulation and synthetic data generation for High-Energy Physics (HEP) research is profound. While there are physics-accurate simulation frameworks available to provide the most realistic data syntheses, these tools are slow. Additionally, the output from physics-accurate simulations is closely tied to the experiment, e.g., fixed detector geometry, that the simulation was developed for and its software. | ||

| + | |||

| + | Fast simulation frameworks on the other hand, can drastically simplify the simulation, while still striking a balance between speed and accuracy of the simulated events. The applications of simplified simulations and data are numerous. We will be focusing on the role of such data as an enabler for Machine Learning (ML) model design research. | ||

| − | + | This project aims to extend the REDVID simulation framework [1, 2] through addition of new features. | |

| − | |||

| − | |||

| − | + | ==== Electron simulation ==== | |

| + | The main feature considered for this iteration is support for different particles, especially electrons. | ||

| − | + | It is paramount to have enough differentiation between different particle types within a simulation. To be able to simulate the behaviour of an electron, certain characteristics have to be implemented, which are as follows: | |

| − | |||

| − | + | * Electrons interact with matter and could emit bremsstrahlung radiation, in turn, leading to generation of secondary particles in the form of showers. | |

| + | * The concept of jets and showers can be designed in the same way within REDVID. This will be an acceptable simplification and boosts code reuse. | ||

| + | * Electrons also lose energy through bremsstrahlung radiation as they go through the matter. This loss of energy can alter the electron's trajectory, causing it to slow down or change direction. | ||
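The energy loss described in the list above can be sketched with the usual radiation-length picture, in which the mean energy of a high-energy electron falls off as E(x) = E0 exp(-x / X0). This is only an illustration under stated assumptions: the function names are hypothetical and not part of REDVID, the silicon radiation length is an approximate tabulated value, and fluctuations and shower development are ignored.

```python
import math

X0_SILICON_CM = 9.37  # approximate radiation length of silicon in cm


def mean_energy_after(e0_gev, thickness_cm, x0_cm=X0_SILICON_CM):
    """Mean electron energy after crossing a given thickness of material."""
    if thickness_cm < 0 or x0_cm <= 0:
        raise ValueError("thickness must be non-negative and X0 positive")
    return e0_gev * math.exp(-thickness_cm / x0_cm)


def mean_energy_after_layers(e0_gev, layer_thicknesses_cm, x0_cm=X0_SILICON_CM):
    """Apply the same mean loss layer by layer, e.g. for detector sublayers with thickness."""
    energy = e0_gev
    for thickness in layer_thicknesses_cm:
        energy = mean_energy_after(energy, thickness, x0_cm)
    return energy


# Hypothetical example: a 10 GeV electron crossing four 0.03 cm silicon layers.
print(mean_energy_after_layers(10.0, [0.03, 0.03, 0.03, 0.03]))
```

Because the mean loss is exponential, crossing several layers is equivalent to crossing their summed thickness, which gives a simple consistency check for an implementation.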

| − | + | There will be a need for dedicated virtual detector segments to act as matter, or the detector sublayer should be considered with thickness, or both. The student will test the impact of the added information on developed ML models, which may involve training/retraining of these models. | |

| − | |||

| − | + | ==== Muon simulation ==== | |

| + | The main feature considered for this iteration is support for different particles, especially muons. | ||

| − | + | It is paramount to have enough differentiation between different particle types within a simulation. To be able to simulate the behaviour of a muon, certain characteristics have to be implemented, which are as follows: | |

| − | |||

| − | + | * Muons are heavy particles and as a result, possess higher penetration power on matter. | |

| + | * Muons are unstable particles and decay into other particles, but not necessarily within the range of the detector. | ||

| + | * Muons interact with matter, which could result in a change of the original direction. | ||

| + | * Muons are charged particles and are affected by magnetic fields, resulting in bent trajectories. The curvature of muon trajectories in magnetic fields reveals information about their momentum. | ||

| + | * Distinguishing muons from other particles, i.e., background signals, is crucial. | ||
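The remark above that muons decay, but not necessarily within the detector, can be made quantitative with a short sketch. The mean decay length in the lab frame is L = (p / m) c tau, and the probability of surviving a distance d is exp(-d / L). The function names are hypothetical and not part of REDVID; the muon mass and c tau are approximate tabulated values.

```python
import math

MUON_MASS_GEV = 0.105658  # approximate muon mass in GeV/c^2
MUON_CTAU_M = 658.64      # approximate c * (mean lifetime) of the muon in metres


def decay_length_m(p_gev):
    """Mean lab-frame decay length: beta * gamma * c * tau, with beta * gamma = p / m."""
    if p_gev <= 0:
        raise ValueError("momentum must be positive")
    return p_gev / MUON_MASS_GEV * MUON_CTAU_M


def survival_probability(p_gev, distance_m):
    """Probability that a muon of momentum p has not decayed after a given distance."""
    return math.exp(-distance_m / decay_length_m(p_gev))


# Hypothetical example: a 10 GeV/c muon crossing 10 m of detector.
print(decay_length_m(10.0), survival_probability(10.0, 10.0))
```

For momenta of a few GeV/c and above, the decay length is tens of kilometres, so treating muons as stable inside the detector volume is usually a reasonable simplification.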

| − | + | The student shall study, select and implement a minimum set of distinguishing characteristics to REDVID. A validation step, showcasing the differences in particle behaviour may be required. There may be a need for dedicated virtual detector layers to be defined. The student will test the impact of the added information on developed ML models, which may involve training/retraining of these models. | |

| − | + | The project is part of an ongoing effort to train and test ML models for particle track reconstruction for the HL-LHC. The improved version of REDVID can be used by the student and other users to generate training data for ML models. Depending on the progress and the interest, a secondary goal could be to perform comparisons with physics-accurate simulations or to investigate the impact of the new features on developed ML models. | |

'''Bonus:''' The student will be encouraged and supported to publish the output of this study in a relevant journal, such as "Data in Brief" by Elsevier.

====Appendix - Terminology====

The terminology for the considered simulations and their features is domain-specific and is explained below:

*Synthetic data: Data generated during a simulation, which resembles real data to a limited extent.

| + | *Physics-accurate simulation: A type of simulation that strongly takes into account real-world physical interactions and utilises physics formulas to achieve this. | ||

| + | *Complexity-aware simulation framework: A simulator which can be configured with different levels of simulation complexity, making the simulation closer or further away from the real-world case. | ||

| + | *Complexity-reduced data set: Simplified data resulting from simplified simulations. This is in comparison to real data, or data generated by physics-accurate simulations. | ||

==== References ====

[1] U. Odyurt et al., 2023, "Reduced Simulations for High-Energy Physics, a Middle Ground for Data-Driven Physics Research". URL: https://doi.org/10.48550/arXiv.2309.03780

[2] U. Odyurt, 2023, "REDVID Simulation Framework". URL: https://virtualdetector.com/redvid

[3] T. Sjöstrand et al., 2006, "PYTHIA 6.4 physics and manual". URL: https://doi.org/10.1088/1126-6708/2006/05/026

Contact: ''[mailto:uodyurt@nikhef.nl dr. ir. Uraz Odyurt], [mailto:f.dias@nikhef.nl dr. Flavia de Almeida Dias]''

| + | ---- | ||

=== Dark Matter: Building better Dark Matter Detectors - the XAMS R&D Setup ===

The Amsterdam Dark Matter group operates an R&D xenon detector at Nikhef. The detector is a dual-phase xenon time-projection chamber and contains about 0.5 kg of ultra-pure liquid xenon in the central volume. We use this detector for the development of new detection techniques - such as utilizing our newly installed silicon photomultipliers - and to improve the understanding of the response of liquid xenon to various forms of radiation. The results could be directly used in the XENONnT experiment, the world’s most sensitive direct detection dark matter experiment at the Gran Sasso underground laboratory, or for future Dark Matter experiments like DARWIN. We have several interesting projects for this facility. We are looking for someone who is interested in working in a laboratory on high-tech equipment, modifying the detector, taking data and analyzing the data themselves. You will "own" this experiment.

''Contact: [mailto:decowski@nikhef.nl Patrick Decowski] and [mailto:z37@nikhef.nl Auke Colijn]''

===Dark Matter: Searching for Dark Matter Particles - XENONnT Data Analysis===

The XENON collaboration has used the XENON1T detector to achieve the world’s most sensitive direct detection dark matter results and is currently operating the XENONnT successor experiment. The detectors operate at the Gran Sasso underground laboratory and consist of so-called dual-phase xenon time-projection chambers filled with ultra-pure xenon. Our group has an opening for a motivated MSc student to do analysis with the new data coming from the XENONnT detector. The work will consist of understanding the detector signals and applying a deep neural network to improve the (gas-) background discrimination in our Python-based analysis tool, in order to improve the sensitivity for low-mass dark matter particles. The work will continue a study started by a recent graduate. There will also be an opportunity to do data-taking shifts at the Gran Sasso underground laboratory in Italy.

''Contact: [mailto:decowski@nikhef.nl Patrick Decowski] and [mailto:z37@nikhef.nl Auke Colijn]''

===Dark Matter: Signal reconstruction and correction in XENONnT===

XENONnT is a low-background experiment operating at the INFN - Gran Sasso underground laboratory with the main goal of detecting Dark Matter interactions with xenon target nuclei. The detector, consisting of a dual-phase time projection chamber, is filled with ultra-pure xenon, which acts as a target and detection medium. Understanding the detector's response to various calibration sources is a mandatory step in exploiting the scientific data acquired. This MSc thesis aims to develop new methods to improve the reconstruction and correction of scintillation/ionization signals from calibration data. The student will work with modern analysis techniques (Python-based) and will collaborate with other analysts within the international XENON Collaboration.

| + | ''Contact: [mailto:mpierre@nikhef.nl Maxime Pierre], [mailto:decowski@nikhef.nl Patrick Decowski]'' | ||

| + | |||

| + | ===Dark Matter: The Ultimate Dark Matter Experiment - DARWIN Sensitivity Studies=== | ||

| + | DARWIN is the “ultimate” direct detection dark matter experiment, with the goal to reach the so-called “neutrino floor”, when neutrinos become a hard-to-reduce background. The large and exquisitely clean xenon mass will allow DARWIN to also be sensitive to other physics signals such as solar neutrinos, double-beta decay from Xe-136, axions and axion-like particles etc. While the experiment will only start in 2027, we are in the midst of optimizing the experiment, which is driven by simulations. We have an opening for a student to work on the GEANT4 Monte Carlo simulations for DARWIN. We are also working on a “fast simulation” that could be included in this framework. It is your opportunity to steer the optimization of a large and unique experiment. This project requires good programming skills (Python and C++) and data analysis/physics interpretation skills. | ||

| + | |||

| + | ''Contact: [mailto:t.pollmann@nikhef.nl Tina Pollmann], [mailto:decowski@nikhef.nl Patrick Decowski] or [mailto:z37@nikhef.nl Auke Colijn]'' | ||

| + | |||

| + | ===Dark Matter: Exploring new background sources for DARWIN=== | ||

| + | Experiments based on the xenon dual-phase time projection chamber detection technology have already demonstrated their leading role in the search for Dark Matter. The unprecedented low level of background reached by the current generation, such as XENONnT, allows such experiments to be sensitive to new rare-events physics searches, broadening their physics program. The next generation of experiments is already under consideration with the DARWIN observatory, which aims to surpass its predecessors in terms of background level and mass of xenon target. With the increased sensitivity to new physics channels, such as the study of neutrino properties, new sources of backgrounds may arise. This MSc thesis aims to investigate potential new sources of background for DARWIN and is a good opportunity for the student to contribute to the design of the experiment. This project will rely on Monte Carlo simulation tools such as GEANT4 and FLUKA, and good programming skills (Python and C++) are advantageous. | ||

| + | |||

| + | ''Contact: [mailto:mpierre@nikhef.nl Maxime Pierre], [mailto:decowski@nikhef.nl Patrick Decowski]'' | ||

| + | |||

| + | ===Dark Matter: Sensitive tests of wavelength-shifting properties of materials for dark matter detectors=== | ||

| + | Rare event search experiments that look for neutrino and dark matter interactions are performed with highly sensitive detector systems, often relying on scintillators, especially liquid noble gases, to detect particle interactions. Detectors consist of structural materials that are assumed to be optically passive, and light detection systems that use reflectors, light detectors, and sometimes, wavelength-shifting materials. MSc theses are available related to measuring the efficiency of light detection systems that might be used in future detectors. Furthermore, measurements to ensure that presumably passive materials do not fluoresce, at the low level relevant to the detectors, can be done. Part of the thesis work can include Monte Carlo simulations and data analysis for current and upcoming dark matter detectors, to study the effect of different levels of desired and nuisance wavelength shifting. In this project, students will acquire skills in photon detection, wavelength shifting technologies, vacuum systems, UV and extreme-UV optics, detector design, and optionally in Python and C++ programming, data analysis, and Monte Carlo techniques. | ||

| + | |||

| + | ''Contact: [mailto:Tina.Pollmann@tum.de Tina Pollmann]'' | ||

| + | |||

| + | ===Detector R&D: Energy Calibration of hybrid pixel detector with the Timepix4 chip=== | ||

The Large Hadron Collider at CERN will increase its luminosity in the coming years. For the LHCb experiment the number of collisions per bunch crossing increases from 7 to more than 40. To distinguish all tracks from the quasi-simultaneous collisions, time information will have to be used in addition to spatial information. A big step on the way to fast silicon detectors is the recently developed Timepix4 ASIC. Timepix4 consists of 448x512 pixels, but the pixels are not identical and there are pixel-to-pixel fluctuations in the time and charge measurement. The ultimate time resolution can only be achieved after calibration of both the time and energy measurements.

The goal of this project is to study the energy calibration of Timepix4. Typical research questions are: how does the resolution depend on the threshold and the Krummenacher (discharge) current, and does a different sensor affect the energy resolution? In this research you will do measurements with calibration pulses, lasers and radioactive sources to obtain data to calibrate the detector. The work consists of hands-on work in the lab to build/adapt the test set-up, and analysis of the data obtained.
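As an illustration of what a per-pixel energy calibration can look like, the sketch below uses a surrogate function that is commonly applied to Timepix-family chips, relating time-over-threshold (ToT) to deposited energy: ToT(E) = a E + b - c / (E - t). Whether this project uses this form is an assumption, and the parameter values are hypothetical placeholders rather than measured Timepix4 values. The inverse follows from solving the resulting quadratic equation for E.

```python
import math


def tot_from_energy(energy, a, b, c, t):
    """Surrogate calibration curve: linear at high energy, non-linear near threshold."""
    return a * energy + b - c / (energy - t)


def energy_from_tot(tot, a, b, c, t):
    """Invert the surrogate function, taking the physical root with energy > t."""
    linear_term = a * t + tot - b
    discriminant = (b + a * t - tot) ** 2 + 4.0 * a * c
    return (linear_term + math.sqrt(discriminant)) / (2.0 * a)


# Hypothetical per-pixel parameters, for illustration only.
params = dict(a=1.5, b=20.0, c=50.0, t=1.0)
for energy in (5.0, 20.0, 60.0):
    tot = tot_from_energy(energy, **params)
    print(energy, tot, energy_from_tot(tot, **params))
```

In practice the four parameters would be fitted per pixel from measurements with known energies, for example test pulses or radioactive sources, which is where the pixel-to-pixel fluctuations mentioned above enter.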

| + | |||

''Contact: [mailto:doppenhu@nikhef.nl Daan Oppenhuis], [mailto:hella.snoek@nikhef.nl Hella Snoek]''

| + | |||

| + | ===Detector R&D: Studies of wafer-scale sensors for ALICE detector upgrade and beyond=== | ||

One of the biggest milestones of the ALICE detector upgrade (foreseen in 2026) is the implementation of wafer-scale (~ 28 cm x 18 cm) monolithic silicon active pixel sensors in the tracking detector, with the goal of having truly cylindrical barrels around the beam pipe. To demonstrate this technology, which is unprecedented in high energy physics detectors, a few chips will soon be available in the Nikhef laboratories for testing and characterization purposes.

| + | The goal of the project is to contribute to the validation of the samples against the ALICE tracking detector requirements, with a focus on timing performance in view of other applications in future high energy physics experiments beyond ALICE. | ||

| + | We are looking for a student with a focus on lab work and interested in high precision measurements with cutting-edge instrumentation. You will be part of the Nikhef Detector R&D group and you will have, at the same time, the chance to work in an international collaboration where you will report about the performance of these novel sensors. There may even be the opportunity to join beam tests at CERN or DESY facilities. Besides interest in hardware, some proficiency in computing is required (Python or C++/ROOT). | ||

| + | |||

| + | ''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld]'' | ||

| − | ''Contact: [mailto: | + | ===Detector R&D: Time resolution of monolithic silicon detectors=== |

| + | Monolithic silicon detectors based on industrial Complementary Metal Oxide Semiconductor (CMOS) processes offer a promising approach for large scale detectors due to their ease of production and low material budget. Until recently, their low radiation tolerance has hindered their applicability in high energy particle physics experiments. However, new prototypes ~~such as the one in this project~~ have started to overcome these hurdles, making them feasible candidates for future experiments in high energy particle physics. In this project, you will investigate the temporal performance of a radiation-hard monolithic detector prototype, produced at the end of 2023, using laser setups in the laboratory. You will also participate in meetings with the international collaboration working on this detector to present reports on the prototype's performance. You will study in detail how different aspects of the system, such as charge calibration and power consumption, affect the temporal resolution. Depending on the progress of the work, a first full three-dimensional characterization of the prototype's performance using a state-of-the-art two-photon absorption laser setup at Nikhef and/or an investigation of irradiated samples, for a closer look at the impact of radiation damage on the prototype, are possible. This project is looking for someone interested in working hands-on with cutting-edge detector and laser systems at the Nikhef laboratory. Python programming skills and Linux experience are an advantage. | ||

| + | |||

| + | ''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld], [mailto:uwe.kraemer@nikhef.nl Uwe Kraemer]'' | ||

| − | === | + | ===Detector R&D: Improving a Laser Setup for Testing Fast Silicon Pixel Detectors=== |

| − | + | For the upgrades of the innermost detectors of experiments at the Large Hadron Collider in Geneva, in particular to cope with the large number of collisions per second from 2027, the Detector R&D group at Nikhef tests new pixel detector prototypes with a variety of laser equipment with several wavelengths. The lasers can be focused down to a small spot to scan over the pixels on a pixel chip. Since the laser penetrates the silicon, the pixels will not be illuminated by just the focal spot, but by the entire three-dimensional, hourglass-shaped (double-cone) light intensity distribution. So, how well defined is the volume in which charge is released? And can that be made much smaller than a pixel? And, if so, what would the optimum focus be? For this project you will first estimate the intensity distribution that can be expected inside a sensor. This will correspond to the density of released charge within the silicon. To verify predictions, you will measure real pixel sensors for the LHC experiments. | |

| + | This project will involve a lot of hands-on work in the lab, as well as programming and work on Unix machines. | ||
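As a rough illustration of the first step, the hourglass-shaped intensity distribution of a focused laser can be approximated by an ideal Gaussian beam. The sketch below is only a starting point under stated assumptions: a perfect Gaussian beam, no absorption, no refraction or aberration at the sensor surface, and a refractive index of about 3.5 for silicon. The function names and the example waist and wavelength are hypothetical and not taken from the Nikhef setup.

```python
import math


def rayleigh_range_um(w0_um, wavelength_um, n=3.5):
    """Rayleigh range inside a medium of refractive index n.

    w0_um is the 1/e^2 beam radius at the waist, wavelength_um is the
    vacuum wavelength. n = 3.5 is an assumed, approximate value for silicon.
    """
    return math.pi * w0_um ** 2 * n / wavelength_um


def beam_radius_um(z_um, w0_um, wavelength_um, n=3.5):
    """1/e^2 beam radius at a distance z_um from the waist."""
    z_r = rayleigh_range_um(w0_um, wavelength_um, n)
    return w0_um * math.sqrt(1.0 + (z_um / z_r) ** 2)


def relative_intensity(r_um, z_um, w0_um, wavelength_um, n=3.5):
    """Intensity relative to the on-axis value at the waist.

    Absorption in the silicon is ignored in this sketch.
    """
    w = beam_radius_um(z_um, w0_um, wavelength_um, n)
    return (w0_um / w) ** 2 * math.exp(-2.0 * r_um ** 2 / w ** 2)


if __name__ == "__main__":
    # Hypothetical example values: 1 um waist, 1064 nm light.
    w0, lam = 1.0, 1.064
    for z in (0.0, 10.0, 50.0, 100.0):
        print(f"z = {z:6.1f} um  beam radius = {beam_radius_um(z, w0, lam):6.2f} um")
```

Comparing the beam radius at different depths with the pixel pitch and sensor thickness gives a first impression of how well the charge deposition volume is confined; a realistic estimate would also need to include absorption and the optics of the actual setup.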

| − | ''Contact: [mailto: | + | ''Contact: [mailto:martinfr@nikhef.nl Martin Fransen]'' |

| − | === | + | ===Detector R&D: Time resolution of hybrid pixel detectors with the Timepix4 chip=== |

| − | + | Precise time measurements with silicon pixel detectors are very important for experiments at the High-Luminosity LHC and the future circular collider. The spatial resolution of current silicon trackers will not be sufficient to distinguish the large number of collisions that will occur within individual bunch crossings. In a new method, typically referred to as 4D tracking, spatial measurements of pixel detectors will be combined with time measurements to better distinguish collision vertices that occur close together. New sensor technologies are being explored to reach the required time measurement resolution of tens of picoseconds, and the results are promising. | |

| + | However, the signals that these pixelated sensors produce have to be processed by front-end electronics, which hence play a large role in the total time resolution of the detector. The front-end electronics has many parameters that can be optimised to give the best time resolution for a specific sensor type. | ||

| + | In this project you will be working with the Timepix4 chip, which is a so-called application specific integrated circuit (ASIC) that is designed to read out pixelated sensors. This ASIC is used extensively in detector R&D for the characterisation of new sensor technologies requiring precise timing (< 50 ps). To study the time resolution you will be using laser setups in our lab, and there might be an opportunity to join a test with charged particle beams at CERN. | ||

| + | These measurements will be complemented with data from the built-in calibration-pulse mechanism of the Timepix4 ASIC. Your work will enable further research performed with this ASIC, and serve as input to the design and operation of future ASICs for experiments at the High-Luminosity LHC. | ||

| + | ''Contact: [mailto:k.heijhoff@nikhef.nl Kevin Heijhoff] and [mailto:martinb@nikhef.nl Martin van Beuzekom]'' | ||

| − | + | ===Detector R&D: Performance studies of Trench Isolated Low Gain Avalanche Detectors (TI-LGAD) === | |

| + | The future vertex detector of the LHCb Experiment needs to measure the spatial coordinates and time of the particles originating in the LHC proton-proton collisions with resolutions better than 10 um and 50 ps, respectively. Several technologies are being considered to achieve these resolutions. Among those is a novel sensor technology called Trench Isolated Low Gain Avalanche Detector. | ||

| + | Prototype pixelated sensors have been manufactured recently and have to be characterised. To this end, these new sensors will be bump bonded to a Timepix4 ASIC, which provides charge and time measurements in each of its 230 thousand pixels. Characterisation will be done using a lab setup at Nikhef, and includes tests with a micro-focused laser beam, radioactive sources, and possibly with particle tracks obtained in a test beam. This project involves data taking with these new devices and analysing the data to determine performance parameters such as the spatial and temporal resolution as a function of temperature and other operational conditions. | ||

| + | ''Contacts: [mailto:kazu.akiba@nikhef.nl Kazu Akiba] and [mailto:martinb@nikhef.nl Martin van Beuzekom]'' | ||

| − | === Detector R&D: | + | === Detector R&D: A Telescope with Ultrathin Sensors for Beam Tests=== |

| − | + | To measure the performance of new prototypes for upgrades of the LHC experiments and beyond, a telescope is typically placed in a beam line of charged particles, so that the results in the prototype can be compared to particle tracks measured with this telescope. In this project, you will continue work on a very lightweight, compact telescope using ALICE PIxel DEtectors (ALPIDEs). This includes work on the mechanics, data acquisition software, and a moveable stage. You are expected to test this telescope in the Delft Proton Therapy Center. If time allows, you will add a timing plane and perform a measurement with one of our prototypes. Apart from travel to Delft, there is a possibility to travel to other beam line facilities. | |

''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld]'' | ''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld]'' | ||

| + | ===Detector R&D: Laser Interferometer Space Antenna (LISA) - the first gravitational wave detector in space=== | ||

| + | |||

| + | The space-based gravitational wave antenna LISA is one of the most challenging space missions ever proposed. ESA plans to launch three spacecraft, separated by a few million kilometres, around 2035. This constellation measures tiny variations in the distances between test masses located in each satellite to detect gravitational waves from sources such as supermassive black holes. LISA is based on laser interferometry, and the three satellites form a giant Michelson interferometer. LISA measures a relative phase shift between one local laser and one distant laser by light interference. The phase shift measurement requires sensitive sensors. The Nikhef DR&D group fabricated prototype sensors in 2020 together with the photonics industry and the Dutch institute for space research SRON. Nikhef & SRON are responsible for the Quadrant PhotoReceiver (QPR) system: the sensors, the housing including a complex mount to align the sensors with an accuracy of tens of nanometres, various environmental tests at the European Space Research and Technology Centre (ESTEC), and the overall performance of the QPR in the LISA instrument. Currently we are fabricating improved sensors, optimizing the mechanics and preparing environmental tests. As an MSc student, you will work on various aspects of the wavefront sensor development: studying the performance of the epitaxial stacks of Indium-Gallium-Arsenide, setting up test benches to characterize the sensors and QPR system, and performing the actual tests and data analysis, in combination with performance studies and simulations of the LISA instrument. | ||

| + | Possible projects are listed below; please contact us, as the exact content may change: | ||

| + | |||

| + | #'''Title''': Simulating LISA QPD performance for LISA mission sensitivity. <br> '''Topic''': Simulation and Data Analysis. <br> '''Description''': We must provide accurate information to the LISA collaboration about the expected and actual performance of the LISA QPRs. This project will focus on integrating data from measurements taken at Nikhef into the simulation packages used within the LISA collaboration. The student will have the option to collect their own data to verify the simulations. Performance parameters include spatial uniformity and phase response, crosstalk and thermal response across the LISA sensitivity. <br> These simulations can then be used to investigate the full LISA performance and the impact on noise sources. This will involve simulating the heterodyne signals expected on the LISA QPD and the impact on sensing techniques such as Differential Wavefront Sensing (DWS) and on Tilt-to-Length (TTL) noise. Simulation tools include Finesse (Python), IFOCAD (C++) or FieldProp (MATLAB), depending on the student's capabilities and preference. This work is important for understanding how stable and how noisy LISA interferometry will be during real operation in space. | ||

| + | #'''Title''': Investigate the Response of the Gap in the LISA QPD. <br> '''Topic''': Experimental. <br> '''Description''': At Nikhef we are developing the photodiodes that will be used in the upcoming ESA/NASA LISA mission. We currently have our first batch of Quadrant Photodiodes (QPDs) that vary in diameter, thickness and gap width between the quadrants. The goal of this project is to develop a free-space laser test set-up to measure the response of the gap between the quadrants of the LISA Quadrant Photodiode (QPD). It is important to understand the behaviour of the gap between the photodiode quadrants since this can impact the overall performance of the photodiode and thus the sensitivity of LISA. <br> The measurements will involve characterising the test laser beam, configuring test equipment, and handling and installing optical components. In addition to taking the data, the student will be responsible for analysing the results, preferably in Python, although other programming languages are acceptable depending on the student's preference. | ||

| + | #'''Title''': Investigate the Response of LISA QPDs for Einstein Telescope Pathfinder. <br> '''Topic''': Experimental. <br> '''Description''': Current gravitational wave (GW) interferometers typically operate at a wavelength of 1064 nm. However, future GW detectors will operate at longer wavelengths such as 1550 nm or 2000 nm. As a result of the wavelength change, much of the current technology is unsuitable, so developments are underway for the next generation of GW detectors. Europe’s future GW detector, the Einstein Telescope, is currently in its infancy. A smaller scale prototype, known as ET Pathfinder, is currently being built and serves as a test bench for the full scale detector. <br> At Nikhef’s R&D group, we want to develop quadrant photodiodes (QPDs) that sense the light from the interferometer for the Einstein Telescope (ET) and ET Pathfinder. These QPDs require very low noise as well as high sensitivity in order to measure the small interferometer signals. To that end, our first step is to use the current QPDs that have been developed for the ESA/NASA LISA mission. <br> This project will focus on performance tests of the LISA QPDs using 1550 nm light. The student will be tasked with developing a test setup as well as taking the data and analysing the results. As part of this project, the student will learn about laser characterisation, Gaussian optics and instrumentation techniques. These results will be important for designing the next generation of QPDs and are of interest to the ET consortium, where the student can present their results. | ||

| + | |||

| + | |||

| + | ''Contact: [mailto:nielsvb@nikhef.nl Niels van Bakel] or [mailto:tmistry@nikhef.nl Timesh Mistry]'' | ||

| − | === Detector R&D: | + | ===Detector R&D: Other projects=== |

| − | + | Are you looking for a slightly different project? Are the above projects already taken? Are you coming in at an unusual time of the year? Do not hesitate to contact us! We always have new projects coming up at different times in the year and we are open to your ideas. | |

''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld]'' | ''Contact: [mailto:jory.sonneveld@nikhef.nl Jory Sonneveld]'' | ||

| − | === | + | ===Gravitational Waves: Computer modelling to design the laser interferometers for the Einstein Telescope=== |

| − | The | + | |

| + | A new field of instrument science led to the successful detection of gravitational waves by the LIGO detectors in 2015. We are now preparing the next generation of gravitational wave observatories, such as the Einstein Telescope, with the aim to increase the detector sensitivity by a factor of ten, which would allow, for example, to detect stellar-mass black holes from early in the universe when the first stars began to form. This ambitious goal requires us to find ways to significantly improve the best laser interferometers in the world. | ||

| + | |||

| + | Gravitational wave detectors are complex Michelson-type interferometers enhanced with optical cavities. We develop and use numerical models to study these laser interferometers, to invent new optical techniques and to quantify their performance. For example, we synthesize virtual mirror surfaces to study the effects of higher-order optical modes in the interferometers, and we use opto-mechanical models to test schemes for suppressing quantum fluctuations of the light field. We can offer several projects based on numerical modelling of laser interferometers. All projects will be directly linked to the ongoing design of the Einstein Telescope. | ||

| + | |||

| + | ''Contact: [mailto:a.freise@nikhef.nl Andreas Freise]'' | ||

| + | |||

| + | ===Gravitational-Waves: Get rid of those damn vibrations!=== | ||

| + | In 2015 large scale, precision interferometry led to the detection of gravitational-waves. In 2017 Europe’s Advanced Virgo detector joined this international network and the best studied astrophysical event in history, GW170817, was detected in both gravitational waves and across the electromagnetic spectrum. | ||

| + | |||

| + | The Nikhef gravitational wave group is actively contributing to improvements towards current gravitational-wave detectors and the rapidly maturing design for Europe’s next generation of gravitational-wave observatory, Einstein Telescope, with one of two candidate sites located in the Netherlands. These detectors will unveil the gravitational symphony of the dark universe out to cosmological distances. Breaking past the sensitivity achieved by the current observatories will require a radically new approach to core components of these state of the art machines. This is especially true at the lowest, audio-band, frequencies that the Einstein Telescope is targeting where large improvements are needed. | ||

| + | |||

| + | Our project, Omnisens, brings techniques from space-based satellite control back to Earth, building a platform capable of actively cancelling ground vibrations to levels never reached in the past. This is realised with state-of-the-art compact interferometric sensors and precision mechanics. Substantial cancellation of seismic motion is an essential improvement for the Einstein Telescope, to reach below attometer (10<sup>-18</sup> m) displacements. | ||

| + | |||

| + | We are excited to offer two projects in 2024: | ||

| + | |||

| + | #You will experimentally demonstrate and optimise Omnisens’ novel vibration isolation for future deployment on the Einstein Telescope. The activity will involve hands-on experience with laser, electronic, mechanical and high-vacuum systems. | ||

| + | # You will contribute to the design of the Einstein Telescope by modelling the coupling of seismic and technical noises (such as actuation and sensing noises) through different configurations of seismic actuation chains. An accurate modelling of the origin and transmission of those noises is crucial in designing a system that prevents them from limiting the interferometer’s readout. | ||

| + | |||

| + | Contact: [mailto:c.m.mow-lowry@vu.nl Conor Mow-Lowry] | ||

| + | |||

| + | ===Gravitational Waves: Signal models & tools for data analysis === | ||

| + | Theoretical predictions of gravitational-wave (GW) signals provide essential tools to detect and analyse transient GW events in the data of GW instruments like LIGO and Virgo. Over the last few years, there has been significant effort to develop signal models that accurately describe the complex morphology of GWs from merging neutron-star and black-hole binaries. Future analyses of Einstein Telescope (ET) data will need to tackle much longer and louder compact binary signals, which will require significant developments beyond the current status quo of GW modeling (i.e., improvements in model accuracy and computational efficiency, increased parameter space coverage, ...) | ||

| + | |||

| + | We can offer up to two projects: in GW signal modeling (at the interface of perturbation theory, numerical relativity simulations and fast phenomenological descriptions), as well as developing applications of signal models in GW data analysis. Although not strictly required, prior knowledge of basic concepts of general relativity and/or GW theory will be helpful. Some proficiency in computing is required (Mathematica, Python or C++). | ||

| + | |||

| + | ''Contact: [mailto:mhaney@nikhef.nl Maria Haney]'' | ||

| + | |||

| + | === Theoretical Particle Physics: High-energy neutrino physics at the LHC=== | ||

| + | High-energy collisions at the LHC and its High-Luminosity upgrade (HL-LHC) produce a large number of particles along the beam collision axis, outside of the acceptance of existing experiments. In 2023 the FASER experiment detected, for the first time, neutrinos produced in LHC collisions, and it is now starting to elucidate their properties. In this context, the proposed Forward Physics Facility (FPF), to be located several hundred meters from the ATLAS interaction point and shielded by concrete and rock, will host a suite of experiments to probe Standard Model (SM) processes and search for physics beyond the Standard Model (BSM). High-statistics neutrino detection will provide valuable data for fundamental topics in perturbative and non-perturbative QCD and in weak interactions. Experiments at the FPF will enable synergies between forward particle production at the LHC and astroparticle physics to be exploited. The FPF has the promising potential to probe our understanding of the strong interactions as well as of proton and nuclear structure, providing access to both the very low-x and the very high-x regions of the colliding protons. The former regime is sensitive to novel QCD production mechanisms, such as BFKL effects and non-linear dynamics, as well as the gluon parton distribution function (PDF) down to x=1e-7, well beyond the coverage of other experiments and providing key inputs for astroparticle physics. In addition, the FPF acts as a neutrino-induced deep-inelastic scattering (DIS) experiment with TeV-scale neutrino beams. The resulting measurements of neutrino DIS structure functions represent a valuable handle on the partonic structure of nucleons and nuclei, particularly their quark flavour separation, that is fully complementary to the charged-lepton DIS measurements expected at the upcoming Electron-Ion Collider (EIC). | ||

| + | |||

| + | In this project, the student will carry out updated predictions for the neutrino fluxes expected at the FPF, assess the precision with which neutrino cross-sections will be measured, develop novel Monte Carlo event generation tools for high-energy neutrino scattering, and quantify their impact on proton and nuclear structure by means of machine learning tools within the NNPDF framework and state-of-the-art calculations in perturbative Quantum Chromodynamics. This project contributes to ongoing work within the FPF Initiative towards a Conceptual Design Report (CDR) to be presented within two years. Topics that can be considered as part of this project include the assessment of the extent to which nuclear modifications of the free-proton PDFs can be constrained by FPF measurements, the determination of the small-x gluon PDF from suitably defined observables at the FPF and the implications for ultra-high-energy particle astrophysics, the study of the intrinsic charm content in the proton and its consequences for the FPF physics program, and the validation of models for neutrino-nucleon cross-sections in the region beyond the validity of perturbative QCD. | ||

| + | |||

| + | References: https://arxiv.org/abs/2203.05090, https://arxiv.org/abs/2109.10905, https://arxiv.org/abs/2208.08372, https://arxiv.org/abs/2201.12363, https://arxiv.org/abs/2109.02653, https://github.com/NNPDF/; see also this [https://www.dropbox.com/s/30co188f1almzq2/rojo-GRAPPA-MSc-2023.pdf?dl=0 project description]. | ||

| + | |||

| + | ''Contacts: [mailto:j.rojo@vu.nl Juan Rojo]'' | ||

| + | ===Theoretical Particle Physics: Unravelling proton structure with machine learning === | ||

| + | At energy-frontier facilities such as the Large Hadron Collider (LHC), scientists study the laws of nature in their quest for novel phenomena both within and beyond the Standard Model of particle physics. An in-depth understanding of the quark and gluon substructure of protons and heavy nuclei is crucial to address pressing questions from the nature of the Higgs boson to the origin of cosmic neutrinos. The key to address some of these questions is carrying out a global analysis of nucleon structure by combining an extensive experimental dataset and cutting-edge theory calculations. Within the NNPDF approach, this is achieved by means of a machine learning framework where neural networks parametrise the underlying physical laws while minimising ad-hoc model assumptions. In addition to the LHC, the upcoming Electron Ion Collider (EIC), to start taking data in 2029, will be the world's first ever polarised lepton-hadron collider and will offer a plethora of opportunities to address key open questions in our understanding of the strong nuclear force, such as the origin of the mass and the intrinsic angular momentum (spin) of hadrons and whether there exists a state of matter which is entirely dominated by gluons. | ||

| + | |||

| + | In this project, the student will develop novel machine learning and AI approaches aimed to improve global analyses of proton structure and better predictions for the LHC, the EIC, and astroparticle physics experiments. These new approaches will be implemented within the machine learning tools provided by the NNPDF open-source fitting framework and use state-of-the-art calculations in perturbative Quantum Chromodynamics. Techniques that will be considered include normalising flows, graph neural networks, gaussian processes, and kernel methods for unsupervised learning. Particular emphasis will be devoted to the automated determination of model hyperparameters, as well as to the estimate of the associated model uncertainties and their systematic validation with a battery of statistical tests. The outcome of the project will benefit the ongoing program of high-precision theory predictions for ongoing and future experiments in particle physics. | ||

| + | |||

| + | References: https://arxiv.org/abs/2201.12363, https://arxiv.org/abs/2109.02653, https://arxiv.org/abs/2103.05419, https://arxiv.org/abs/1404.4293, https://inspirehep.net/literature/1302398, https://github.com/NNPDF/; see also this [https://www.dropbox.com/s/30co188f1almzq2/rojo-GRAPPA-MSc-2023.pdf?dl=0 project description]. | ||

| + | |||

| + | ''Contacts: [mailto:j.rojo@vu.nl Juan Rojo]'' | ||

| + | |||

| + | === Theoretical Particle Physics: Sterile neutrino dark matter=== | ||

| + | |||

| + | The existence of right-handed (sterile) neutrinos is well motivated, as all other Standard Model particles come in both chiralities, and moreover, they naturally explain the small masses of the left-handed (active) neutrinos. If the lightest sterile neutrino is very long lived, it could be dark matter. Although sterile neutrinos can be produced by neutrino oscillations in the early universe, this is not efficient enough to explain all dark matter. It has been proposed that additional self-interactions between sterile neutrinos can solve this (https://arxiv.org/abs/2307.15565, see also the more recent https://arxiv.org/abs/2402.13878). In this project you would examine whether the additional field mediating the self-interactions can also explain the neutrino masses. As a first step you would reproduce the results in the literature, and then extend them to map out the range of masses possible for this extra field. | ||

| + | |||

| + | ''Contacts: [Mailto:mpostma@nikhef.nl Marieke Postma]'' | ||

| + | |||

| + | ===Theoretical Particle Physics: Baryogenesis at the electroweak scale=== | ||

| + | |||

| + | Given that the Standard Model treats particles and antiparticles nearly the same, it is a puzzle why there is no antimatter left in our universe. | ||

| + | Electroweak baryogenesis is the proposal that the matter-antimatter asymmetry is created during the phase transition in which the Higgs field obtains a vacuum expectation value (vev) and the electroweak symmetry is broken. One important ingredient in this scenario is the presence of new charge and parity (CP) violating interactions. However, these are strongly constrained by the very precise measurements of the electric dipole moment of the electron. An old proposal, which was recently revived, is to use a CP-violating coupling of the Higgs field to the gauge field (https://arxiv.org/abs/2307.01270, https://inspirehep.net/literature/300823). The project would be to study the efficacy of these kinds of operators for baryogenesis. | ||

| + | |||

| + | ''Contacts: [Mailto:mpostma@nikhef.nl Marieke Postma]'' | ||

| + | |||

| + | ==='''Theoretical Particle Physics: Neutrinoless double beta decay with sterile neutrinos'''=== | ||

| + | The search for neutrinoless double beta decay represents a prominent probe of new particle physics and is very well motivated by its tight connection to neutrino masses, which, so far, lack an experimentally verified explanation. As such, it also provides a convenient probe of new interactions of the known elementary particles with hypothesized right-handed neutrinos that are thought to play a prime role in neutrino mass generation. The main focus of this project would be the extension of NuDoBe, a Python tool for the computation of neutrinoless double beta decay (0vbb) rates in terms of lepton-number-violating operators in the Standard Model Effective Field Theory (SMEFT), see <nowiki>https://arxiv.org/abs/2304.05415</nowiki>. In the first step, the code should be expanded to also include the effective operators involving right-handed neutrinos, based on the existing literature (<nowiki>https://arxiv.org/abs/2002.07182</nowiki>) covering the general rate of neutrinoless double beta decay within the SMEFT extended by right-handed neutrinos. Besides that, additional functionalities could be added to the code, such as a routine for extracting the explicit form of the neutrino mass and mixing matrices. This work would be very useful for future phenomenological studies and particularly timely given the ongoing experimental efforts, which are to be further boosted by the upcoming tonne-scale upgrades of the double-beta experiments. | ||

| + | |||

| + | ''Contacts: [Mailto:j.devries4@uva.nl Jordy de Vries] and Lukas Graf'' | ||

| + | |||

| + | ==='''Theoretical Particle Physics: Phase space factors for single, double, and neutrinoless beta-decay rates.'''=== | ||

| + | In light of the increasingly precise measurements of beta-decay and double-beta-decay rates and spectra, the theoretical predictions seem to fall behind. The existing, rather phenomenological approaches to the associated phase-space calculations employ a variety of different approximations, introducing errors that are, given their phenomenological nature, not easily quantifiable. A key goal of this project is to understand, reproduce and improve the methods and results available in the literature. Ideally, these efforts would be summarized in the form of a compact Mathematica notebook or Python package available to the broad community of beta-decay experimentalists and phenomenologists, who could easily implement it in the workflows of their analyses. The focus should be not only on the Standard-Model contributions to (double) beta decay, but also on hypothetical exotic modes stemming from various beyond-the-Standard-Model scenarios (see e.g. <nowiki>https://arxiv.org/abs/nucl-ex/0605029</nowiki> and <nowiki>https://arxiv.org/abs/2003.11836</nowiki>). If time permits, new, more particle-physics-based approaches to the phase-space computations can be investigated. | ||

| + | |||

| + | ''Contacts: [Mailto:j.devries4@uva.nl Jordy de Vries] and Lukas Graf'' | ||

| + | ==='''Theoretical Particle Physics''': Predictions for Charged Particle Tracks from First Principles=== | ||

| + | Measurements based on tracks of charged particles benefit from superior angular resolution. This is essential for a new class of observables called energy correlators, for which a range of interesting applications have been identified: studying the [https://arxiv.org/abs/2201.07800 confinement transition], measuring the [https://arxiv.org/abs/2201.08393 top quark mass] more precisely, etc. I developed a [https://arxiv.org/abs/1303.6637 framework] for calculating track-based observables, in which the conversion of quarks and gluons to charged hadrons is described by track functions. This generalization of the well-studied parton distribution functions and fragmentation functions is currently being measured by ATLAS, though the data is not public yet. Interestingly, two groups proposed predicting fragmentation functions from first principles in recent years (https://arxiv.org/abs/2010.02934, https://arxiv.org/abs/2301.09649). In this project you would extend one (or both) approaches to obtain a prediction for the track function. | ||

| + | |||

| + | ''Contact: [mailto:w.j.waalewijn@uva.nl Wouter Waalewijn]'' | ||

| + | |||

| + | ===Neutrinos: Neutrino Oscillation Analysis with the KM3NeT/ORCA Detector=== | ||

| + | The KM3NeT/ORCA neutrino detector at the bottom of the Mediterranean Sea is able to detect oscillations of atmospheric neutrinos. Neutrinos traversing the detector are reconstructed as a function of two observables: the neutrino energy and the neutrino direction. In order to improve the neutrino oscillation analysis, we need to add one more observable, the so-called Bjorken-y, which indicates the fraction of the incoming neutrino's energy that is transferred to the hadronic system rather than to the outgoing lepton. For this project, we will study simulated and real reconstructed data and use those to implement this additional observable in the existing analysis framework. Subsequently, we will study how much the sensitivity of the final analysis improves as a result. | ||

| + | |||

| + | C++ and Python programming skills are advantageous. | ||

| + | |||

| + | ''Contacts:'' [mailto:dveijk@nikhef.nl Daan van Eijk], [mailto:h26@nikhef.nl Paul de Jong] | ||

| + | |||

| + | ===Neutrinos: Searching for neutrinos of cosmic origin with KM3NeT=== | ||

| + | KM3NeT is a neutrino telescope under construction in the Mediterranean Sea, already taking data with the first deployed detection units. In particular the KM3NeT/ARCA detector off-shore of Sicily is designed for high-energy neutrinos and is suited for the detection of neutrinos of cosmic origin. In this project we will use the first KM3NeT data to search for evidence of a cosmic neutrino source, and also study ways to improve the analysis. | ||

| + | |||

| + | ''Contact:'' [mailto:aart.heijboer@nikhef.nl Aart Heijboer] | ||

| + | |||

| + | ===Neutrinos: the Deep Underground Neutrino Experiment (DUNE) === | ||

| + | |||

| + | The Deep Underground Neutrino Experiment (DUNE) is under construction in the USA, and will consist of a powerful neutrino beam originating at Fermilab, a near detector at Fermilab, and a far detector in the SURF facility in Lead, South Dakota, 1300 km away. While travelling, neutrinos oscillate and a fraction of the neutrino beam changes flavour; DUNE will determine the neutrino oscillation parameters to unrivaled precision, and try to make a first detection of CP-violation in neutrinos. In this project, various elements of DUNE can be studied, including the neutrino oscillation fit, neutrino physics with the near detector, event reconstruction and classification (including machine learning), or elements of data selection and triggering. | ||

| + | |||

| + | ''Contact:'' [mailto:h26@nikhef.nl Paul de Jong] | ||

| + | |||

| + | ===Neutrinos: Searching for Majorana Neutrinos with KamLAND-Zen=== | ||

| + | The KamLAND-Zen experiment, located in the Kamioka mine in Japan, is a large liquid scintillator experiment with 750 kg of ultra-pure Xe-136 to search for neutrinoless double-beta decay (0n2b). The observation of the 0n2b process would be evidence for lepton number violation and the Majorana nature of neutrinos, i.e. that neutrinos are their own anti-particles. Current limits on this extraordinarily rare hypothetical decay process are presented as a half-life, with a lower limit of 10^26 years. KamLAND-Zen, the world’s most sensitive 0n2b experiment, is currently taking data and there is an opportunity to work on the data analysis, with the possibility of taking part in a ground-breaking discovery. The main focus will be on developing new techniques to filter the spallation backgrounds, i.e. the production of radioactive isotopes by passing muons. There will be close collaboration with groups in the US (MIT, Berkeley, UW) and Japan (Tohoku University). | ||

| + | |||

| + | ''Contact: [mailto:decowski@nikhef.nl Patrick Decowski]'' | ||

| + | |||

| + | |||

| + | ===Neutrinos: TRIF𝒪RCE (PTOLEMY)=== | ||

| + | |||

| + | The PTOLEMY demonstrator will place limits on the neutrino mass using the ''β''-decay endpoint of atomic tritium. The detector will require a CRES-based (cyclotron radiation emission spectroscopy) trigger and a non-destructive tracking system. The ''"TRItium-endpoint From 𝒪(fW) Radio-frequency Cyclotron Emissions"'' group is developing radio-frequency cavities for the simultaneous transport of endpoint electrons and the extraction of their kinematic information. This is essential to providing a fast online trigger and precise energy-loss corrections to electrons reconstructed near the tritium endpoint. The cryogenic low-noise, high-frequency analogue electronics developed at Nikhef, combined with FPGA-based front-end analysis capabilities, will provide the PTOLEMY demonstrator with its CRES readout and a testbed to be hosted at the Gran Sasso National Laboratory for the full CνB detector. The focus of this project will be modelling cyclotron radiation in RF cavities and its detection for the purposes of single-electron spectroscopy and optimised trajectory reconstruction for prototype and demonstrator setups. This may extend to firmware-based fast tagging and reconstruction algorithm development with the RF-SoC. | ||

| + | |||

| + | ''Contact: [mailto:jmead@nikhef.nl James Vincent Mead]'' | ||

| + | |||

| + | ===Cosmic Rays: Energy loss profile of cosmic ray muons in the KM3NeT neutrino detector === | ||

| + | The dominant signal in the KM3NeT detectors is not neutrinos, but muons created in particle cascades (extensive air showers) initiated when cosmic rays interact at the top of the atmosphere. While these muons are a background for neutrino studies, they present an opportunity to study the nature of cosmic rays and hadronic interactions at the highest energies. Reconstruction algorithms are used to determine the properties of the particle interactions, normally of neutrinos, from the recorded photons. The aim of this project is to explore the possibility of reconstructing the longitudinal energy loss profile of single and multiple simultaneous muons ('bundles') originating from cosmic ray interactions. The potential to use this energy loss profile to extract information on the number of muons and the lateral extension of the muon 'bundles' will also be explored. These properties make it possible to extract information on the high-energy interactions of cosmic rays. | ||

| + | |||

| + | ''Contact: [mailto:rbruijn@nikhef.nl Ronald Bruijn]'' | ||

| + | |||

| + | ===LHCb: Search for light dark particles=== | ||

| + | The Standard Model of elementary particles does not contain a proper Dark Matter candidate. One of the most tantalizing theoretical developments is the class of so-called ''Hidden Valley models'': a mirror-like copy of the ''Standard Model'', with dark particles that communicate with standard ones via a very feeble interaction. These models predict the existence of ''dark hadrons'' – composite particles that are bound similarly to ordinary hadrons in the ''Standard Model''. Such ''dark hadrons'' can be abundantly produced in high-energy proton-proton collisions, making the LHC a unique place to search for them. Some ''dark hadrons'' are stable like a proton, which makes them excellent ''Dark Matter'' candidates, while others decay to ordinary particles after flying a certain distance in the collider experiment. The LHCb detector has a unique capability to identify such decays, particularly if the new particles have a mass below ten times the proton mass. | ||

| + | |||

| + | This project involves a unique search for light ''dark hadrons'' that covers a mass range not accessible to other experiments. It comprises an interesting program of data analysis (Python-based) with non-trivial machine learning solutions, and phenomenology research using a fast simulation framework. Depending on your interest, there is quite a bit of flexibility in the precise focus of the project. | ||

| + | |||

| + | ''Contact: [mailto:andrii.usachov@nikhef.nl Andrii Usachov]'' | ||

| + | |||

| + | ===LHCb: Searching for dark matter in exotic six-quark particles=== | ||

| + | |||

| + | Three quarters of the mass in the Universe is of unknown type. Many hypotheses about this dark matter have been proposed, but none confirmed. Recently it has been proposed that it could consist of particles made of the six quarks uuddss, which would be a Standard-Model solution to the dark matter problem. This idea has recently gained credibility as many similar multi-quark states are being discovered by the LHCb experiment. Such a particle could be produced in decays of heavy baryons, or directly in proton-proton collisions. The anti-particle, made of six antiquarks, could be seen when annihilating with detector material. It is also proposed to use Xi_b baryons produced at LHCb to search for such a state, which would appear as missing 4-momentum in a kinematically constrained decay. The project consists of defining a selection and applying it to LHCb data. See [https://arxiv.org/abs/2007.10378 arXiv:2007.10378]. | ||

| + | |||

| + | Contact: ''[mailto:patrick.koppenburg@cern.ch Patrick Koppenburg]'' | ||

| + | |||

| + | |||

| + | ===LHCb: New physics in the angular distributions of B decays to K*ee=== | ||

| + | |||

| + | Lepton flavour violation in B decays can be explained by a variety of non-Standard-Model interactions. Angular distributions in decays of a B meson to a hadron and two leptons are an important source of information to understand which model is correct. Previous analyses at the LHCb experiment have considered final states with a pair of muons. Our LHCb group at Nikhef concentrates on a new measurement of angular distributions in decays with two electrons. The main challenge in this measurement is the calibration of the detection efficiency. In this project you will confront estimates of the detection efficiency derived from simulation with decay distributions in a well-known B decay. Once the calibration is understood, the very first analysis of the angular distributions in the electron final state can be performed. | ||

| + | |||

| + | Contact: [mailto:m.senghi.soares@nikhef.nl Mara Soares] and [mailto:wouterh@nikhef.nl Wouter Hulsbergen] | ||

| + | |||

| + | ===LHCb: CP violation in B -> J/psi Ks decays with first run-3 data=== | ||

| + | |||

| + | The decay B -> J/psi Ks is the 'golden channel' for measuring the CP-violating angle beta in the CKM matrix. In this project we will use the first data from the upgraded LHCb detector to perform this measurement. Performing such a measurement with a new detector is going to be very challenging: we will learn a lot about whether the upgraded LHCb performs as well as expected. | ||

| + | |||

| + | Contact: ''[mailto:wouterh@nikhef.nl Wouter Hulsbergen]'' | ||

| + | |||

| + | |||

| + | ===LHCb: Optimization of primary vertex reconstruction=== | ||

| + | A key part of the LHCb event classification is the reconstruction of the collision point of the protons from the LHC beams. This so-called primary vertex is found by reconstructing the common origin of the charged particles found in the detector. A rudimentary algorithm exists, but it is expected that its performance can be improved by tuning parameters (or perhaps implementing an entirely new algorithm). In this project you are challenged to optimize the LHCb primary vertex reconstruction algorithm using recent simulated and real data from LHC run-3. | ||

| + | |||

| + | Contact: ''[mailto:wouterh@nikhef.nl Wouter Hulsbergen]'' | ||

| + | |||

| + | ===LHCb: Measurement of B decays to two electrons=== | ||

| + | Instead of searching for new physics by direct production of new particles, one can search for enhancements in very rare processes as an indirect signal for the existence of new particles or forces. The observed decay of Bs to two muons by the LHCb collaboration and Nikhef/Maastricht is such a measurement, and as the rarest decay ever observed at the LHC it has a large impact on the new physics landscape. In this project, we will extend this work by searching for the even rarer decay into two electrons. You would join the ongoing work in the context of an NWO Veni grant, and can be based in Maastricht or at Nikhef. | ||

| + | |||

| + | Contact: ''[mailto:jdevries@nikhef.nl Jacco de Vries]'' | ||

| + | |||

| + | ===Muon Collider=== | ||

| + | There is currently a lively global debate about the next accelerator to succeed the successful LHC. Different options are on the table: linear, circular, electrons, protons, on various continents... Out of these, the most ambitious project is the muon collider, designed to collide the relatively massive (105 MeV) but relatively short-lived (2.2 μs!) leptons. Such a novel collider would combine the advantages of electron-positron colliders (excellent precision) and proton-proton colliders (highest energy). In this project, we'll perform a feasibility study for the search for the elusive double-Higgs process: this as yet unobserved process is crucial to probe the simultaneous interaction of multiple Higgs bosons and thereby the shape of the Higgs potential as predicted in the Brout-Englert-Higgs mechanism. This sensitivity study will be instrumental to understand one of the main scientific prospects for this ambitious project, and also to optimize the detector design, as well as the interface of the particle detectors to the accelerator machine. The project is based at Nikhef but can also be (partially) performed at the University of Twente. | ||

| + | |||